Key Partners In AI Chip Design Are Literally Shaping The Future In Silicon Valley CA

Last week in Silicon Valley, there was a meeting of the minds in artificial intelligence and AI-driven chip design. Two industry juggernauts, Nvidia and Synopsys, held conferences that brought developers and tech innovators together in very different but complementary ways. Nvidia, world-renowned for its AI acceleration technologies and the dominance of its silicon platforms, and Synopsys, a long-standing industry bellwether in semiconductor design tools, IP and automation, are both capitalizing on the huge market opportunity now flourishing in machine learning and artificial intelligence.

Both companies launched a proverbial arsenal of enabling technologies, with Nvidia leading the way with its massive AI accelerator chips and Synopsys giving chip developers the ability to harness AI for many of the laborious steps in the chip design and validation process. In fact, we may soon reach not only an inflection point with AI but also a kind of “inception” point, where AI helps design the very chips that run AI. It's a chicken-and-egg question of sorts: which came first, the AI or the AI chip? It's the stuff of science fiction for many of you, I'm sure, but let's dig into what I felt were a couple of the highlights of last week's AI show of force.

Synopsys Harnesses AI For Semiconductor EDA In Three Dimensions

SYNOPSYS

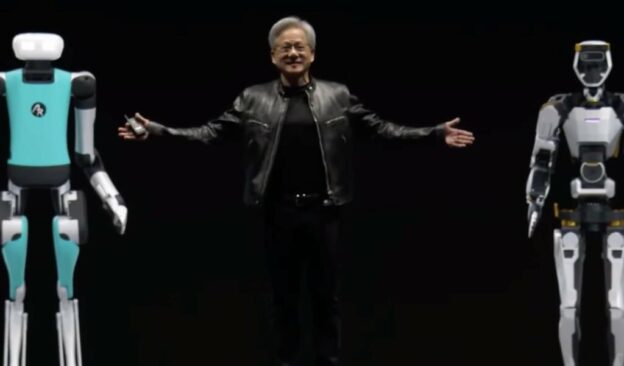

There's no question that the folks at Synopsys stepped into a bit of Nvidia's limelight, given the AI accelerator announcements Nvidia made at its GPU Technology Conference earlier in the week (its accelerators are currently the de facto standard in the data center). However, as Nvidia CEO Jensen Huang pointed out on stage with Synopsys CEO Sassine Ghazi (above), it's for darn good reason: virtually every chip Nvidia designs and sends to manufacturing is implemented using Synopsys EDA tools, from design through verification to hand-off for fab manufacturing. Ghazi's keynote also touched on a new Synopsys technology called 3DSO.ai, and I was kind of blown away.

Synopsys announced its Design Space Optimization AI tool, DSO.ai, back in 2021, and it enabled a massive speed-up in place and route and floorplanning for chip designs. Finding the optimal circuit layout and routing in large-scale semiconductor designs is both labor-intensive and complex, and the manual process often leaves performance, power efficiency and silicon cost on the table. Synopsys DSO.ai has the machine tirelessly work through this iterative process, eliminating many hours of engineering time and speeding time to market with much more optimized chip designs.
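To give a feel for the kind of iterative search being automated, here's a minimal Python sketch of a classic textbook technique, simulated annealing, applied to a toy placement problem. To be clear, this is not how DSO.ai works under the hood; Synopsys didn't detail its internals here, and DSO.ai tunes far more than cell positions. The function names, the grid model and the half-perimeter wirelength (HPWL) cost are my own illustrative assumptions: cells sit on a grid, each net lists the cells it connects, and the search keeps swapping cells to shrink total wire length.

```python
import math
import random


def hpwl(placement, nets):
    """Total half-perimeter wirelength: for each net, the width plus height
    of the bounding box around the cells it connects."""
    total = 0
    for net in nets:
        xs = [placement[c][0] for c in net]
        ys = [placement[c][1] for c in net]
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total


def anneal_placement(num_cells, grid_w, nets, steps=20000, t0=5.0,
                     cooling=0.9995, seed=0):
    """Toy simulated-annealing placer (illustrative, not any vendor's method).
    Starts from a row-by-row layout, repeatedly swaps two cells' grid slots,
    and returns the best layout seen along with its cost and the start cost."""
    rng = random.Random(seed)  # fixed seed keeps runs repeatable
    placement = [(i % grid_w, i // grid_w) for i in range(num_cells)]
    cost = hpwl(placement, nets)
    initial_cost = cost
    best, best_cost = list(placement), cost
    temp = t0
    for _ in range(steps):
        a, b = rng.sample(range(num_cells), 2)
        placement[a], placement[b] = placement[b], placement[a]
        new_cost = hpwl(placement, nets)
        delta = new_cost - cost
        # Always accept improvements; accept some uphill moves while "hot"
        # so the search can escape local minima, less often as it cools.
        if delta <= 0 or rng.random() < math.exp(-delta / temp):
            cost = new_cost
            if cost < best_cost:
                best, best_cost = list(placement), cost
        else:
            placement[a], placement[b] = placement[b], placement[a]  # undo swap
        temp *= cooling
    return best, best_cost, initial_cost


if __name__ == "__main__":
    # Hypothetical 16-cell netlist on a 4x4 grid, one two-pin net per cell.
    nets = [(i, (i * 7 + 3) % 16) for i in range(16)]
    best, best_cost, start_cost = anneal_placement(16, 4, nets)
    print(f"wirelength: {start_cost} -> {best_cost}")
```

Real tools juggle timing, power and congestion alongside wirelength across millions of cells, which is exactly where the manual effort piles up and where handing the search to a machine pays off.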

Now, Synopsys 3DSO.ai takes this technology to the next level for modern 3D-stacked chiplet designs, adding multiple layers of design automation for this new era of the chiplet, along with critical thermal analysis of the design. In essence, Synopsys 3DSO.ai isn't just playing a sort of Tetris to optimize chip designs; it's playing 3D Tetris now, optimizing place and route in three dimensions and then running thermal analysis on the design to ensure it is thermally feasible, or even optimal. So yeah, it's like that. AI-powered chip design in 3D: mind officially blown.
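Extending the same toy idea to stacked dies shows why the "3D Tetris" framing fits. In this hypothetical sketch (again my own illustration, not Synopsys's algorithm), each cell gets a tier index z, nets pay an assumed extra cost for every tier they cross, and a deliberately crude "thermal" screen sums the power of cells stacked in the same x-y column and flags columns over a budget. Real thermal analysis models heat flow through the whole stack; the `tier_cost` weight and the power budget are placeholders.

```python
from collections import defaultdict


def hpwl_3d(placement, nets, tier_cost=4):
    """3D wirelength estimate: x/y bounding-box size plus an assumed weighted
    penalty for each tier a net spans (a stand-in for vertical links)."""
    total = 0
    for net in nets:
        xs, ys, zs = zip(*(placement[c] for c in net))
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
        total += tier_cost * (max(zs) - min(zs))
    return total


def hot_columns(placement, power, budget):
    """Crude thermal screen: add up the power of every cell stacked in the
    same (x, y) column across tiers and return columns over the budget."""
    column_power = defaultdict(float)
    for cell, (x, y, _z) in enumerate(placement):
        column_power[(x, y)] += power[cell]
    return sorted(col for col, p in column_power.items() if p > budget)


# Hypothetical four cells on two tiers: two hot cells stacked at (0, 0).
placement = [(0, 0, 0), (0, 0, 1), (1, 0, 0), (1, 0, 1)]
print(hpwl_3d(placement, [(0, 1), (0, 2), (1, 3)]))
print(hot_columns(placement, [2.0, 2.5, 1.0, 0.5], budget=4.0))
```

Stacking the two hottest cells directly on top of each other shortens the vertical hop but concentrates heat in one column, which is the kind of trade-off a 3D optimizer has to weigh.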

NVIDIA Blackwell GPU AI Accelerator And Robotics Tech Were Showstoppers

For its GPU Technology Conference, Nvidia once again pulled out all the stops, this time selling out San Jose's SAP Center with a huge crowd of developers, press, analysts and even tech dignitaries like Michael Dell. My analyst biz partner and longtime friend, Marco Chiappetta, covered the GTC highlights well in a separate piece (check out Nvidia's NIM inference microservices too, very cool), but for me the stars of Jensen Huang's show were the company's new Blackwell GPU architecture for AI, Project GR00T for building humanoid robots, and another AI-powered chip tool called cuLitho that is now being adopted by Nvidia and TSMC in production environments.

The net-net on cuLitho is that computational lithography, the compute-heavy work behind the costly photomask sets used to pattern chip designs onto wafers in production, just got a much-needed shot in the arm from machine learning and AI. Nvidia claims its GPUs, running its cuLitho software library, can deliver up to a 40X lift in computational lithography performance, with huge power savings over legacy CPU-based servers. And this technology, in partnership with Synopsys on the design and verification side and TSMC on the manufacturing end, is now in full production.

Which brings us to Blackwell. If you thought Nvidia's Hopper H100 and H200 GPUs were monster AI silicon engines, Nvidia's Blackwell is kind of like “releasing the Kraken.” For reference, a single, dual-die Blackwell GPU comprises some 208 billion transistors, more than 2.5X that of Nvidia's Hopper architecture. Those two dies function as one massive GPU, communicating over Nvidia's NV-HBI high-bandwidth interface, which offers a blistering 10TB/s of throughput. Couple that GPU with 192GB of HBM3e memory and 8TB/s of peak memory bandwidth, and we're looking at more than double the memory of H100 along with more than double the bandwidth. Nvidia also pairs two Blackwell GPUs with its Grace CPU in a trifecta AI solution it calls the Grace Blackwell Superchip, aka GB200.
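For those who like to check the math, here's a quick back-of-the-envelope comparison in Python. The Blackwell figures are the ones cited above; the H100 baseline (80 billion transistors, 80GB of HBM3 and roughly 3.35TB/s of bandwidth for the SXM version) is my own assumption, taken from Nvidia's published H100 specs rather than from this keynote.

```python
# Blackwell figures as cited in the article; the H100 SXM baseline is an assumption.
blackwell = {"transistors_billion": 208, "memory_gb": 192, "bandwidth_tb_s": 8.0}
h100_sxm = {"transistors_billion": 80, "memory_gb": 80, "bandwidth_tb_s": 3.35}

# Generational ratio for each metric (Blackwell divided by H100 SXM).
ratios = {metric: blackwell[metric] / h100_sxm[metric] for metric in blackwell}

# Blackwell is two dies acting as one GPU, so split the transistor count per die.
transistors_per_die = blackwell["transistors_billion"] / 2

for metric, ratio in ratios.items():
    print(f"{metric}: {ratio:.2f}x")
print(f"transistors per die: {transistors_per_die:.0f} billion")
```

By these numbers, Blackwell lands at about 2.6X the transistors and roughly 2.4X the memory capacity and bandwidth of an H100 SXM, with each of its two dies carrying about 104 billion transistors.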

Configure a rack full of dual GB200 servers with the company's 5th Gen NVLink technology, which offers 2X the throughput of Nvidia's previous generation, and you have an Nvidia GB200 NVL72 AI supercomputer. An NVL72 rack houses 36 GB200 Superchips, connected via an NVLink spine on its back side. It's a pretty wild design that also includes Nvidia BlueField-3 data processing units, and the company claims it's up to 30X faster than its previous-gen H100-based systems at trillion-parameter large language model inferencing. GB200 NVL72 is also claimed to offer up to 25X lower power consumption and 25X better TCO. The company is also combining eight or more of these racks into DGX SuperPODs built from NVL72 supercomputers. Nvidia announced a multitude of partners adopting Blackwell, including Amazon Web Services, Dell, Google, Meta, Microsoft, OpenAI and many others, and the company is vowing to have these powerful new AI GPU solutions in market later this year.
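The rack math is simple enough to sketch in a few lines. Each GB200 Superchip pairs two Blackwell GPUs with one Grace CPU, and an NVL72 rack holds 36 of them, which is where the "72" comes from. The `rack_totals` helper is just my own illustration, and the 8-rack case mirrors the SuperPOD configuration mentioned above.

```python
# Per-rack building blocks as described for GB200 NVL72.
SUPERCHIPS_PER_RACK = 36
GPUS_PER_SUPERCHIP = 2   # two Blackwell GPUs per GB200
CPUS_PER_SUPERCHIP = 1   # one Grace CPU per GB200


def rack_totals(racks: int) -> dict:
    """Count Superchips, Blackwell GPUs and Grace CPUs across NVL72 racks."""
    superchips = SUPERCHIPS_PER_RACK * racks
    return {
        "superchips": superchips,
        "gpus": superchips * GPUS_PER_SUPERCHIP,
        "cpus": superchips * CPUS_PER_SUPERCHIP,
    }


print(rack_totals(1))  # a single NVL72 rack
print(rack_totals(8))  # an 8-rack DGX SuperPOD
```

One rack works out to 72 Blackwell GPUs and 36 Grace CPUs; eight racks come to 576 Blackwell GPUs.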

So Nvidia is not only leading the charge as the 800 lb gorilla of AI processing; it also appears the company is just getting warmed up.

Robotics is another area where Nvidia continues to accelerate its execution, and Jensen Huang's GTC 2024 robot show, highlighting its Project GR00T (that's right, with two zeros) foundation model for humanoid robots, was another wild ride. GR00T, which stands for Generalist Robot 00 Technology, is all about training robots not only to handle natural language input and conversation but also to mimic human movements and actions, for dexterity and for navigating and adapting to the changing world around them. Like I said, science fiction, but Nvidia is apparently poised to make it reality sooner rather than later with GR00T.

And really, that's what impressed me most about both Nvidia and Synopsys during my time in the valley last week. Problems and workloads that previously seemed nearly unsolvable are now being tackled with machine learning at an ever-accelerating pace. And it's having a compounding effect, with bigger and bigger strides being made year after year. I feel fortunate to be an observer and guide of sorts in this fascinating time for technology, and it's what gets me up in the morning.

https://www.forbes.com/sites/davealtavilla/2024/03/27/nvidia-and-synopsys-punctuate-ai-chip-design-and-acceleration-leadership/amp/