Introduction: a recap of the AI stack & the evolution of neural nets

Most AI engineers and researchers these days do their coding in Python, using either the PyTorch library or Google's newer JAX library. PyTorch is an open-source framework originally developed by Meta, which in 2022 donated it to the newly formed PyTorch Foundation, making it part of the broader Linux Foundation and its community. With this move, Meta made it clear that PyTorch would remain a neutral platform for AI development, thereby encouraging further adoption by other tech firms.

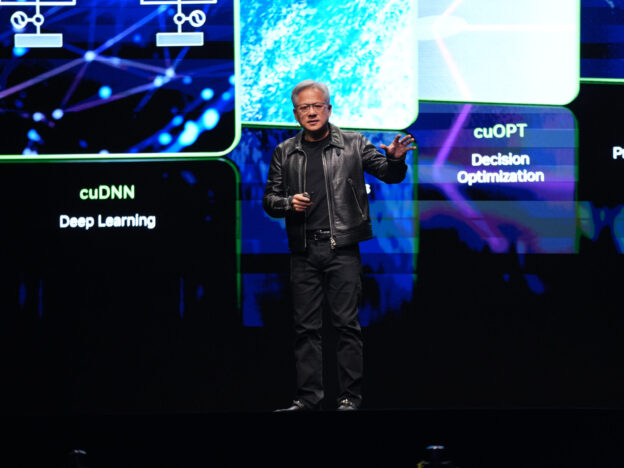

Under the hood, PyTorch relies on Nvidia's CUDA to run on Nvidia GPUs. CUDA is a computing platform that Nvidia introduced in 2006, allowing general-purpose C code to run on the company's GPUs. So basically, the Python code you write calls into PyTorch's compiled C and C++ core, which in turn uses CUDA to execute the work on an Nvidia GPU. C remains a popular programming language, and one that most computer science graduates, and even self-taught developers, are familiar with. This masterstroke by Jensen Huang and Ian Buck opened up Nvidia GPUs to a wide programming community. Before CUDA, programming GPUs was a complex endeavor that remained the sole domain of specialized graphics programmers.
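A minimal sketch of what this layering looks like from the Python side, assuming PyTorch is installed (with or without a GPU). The script only describes the computation; PyTorch's compiled core then dispatches the matrix multiply to a GPU kernel when the tensors live on a CUDA device, or to a CPU routine otherwise. The 512×512 matrix size is an arbitrary choice for illustration.

```python
import torch

# Use the GPU if PyTorch can see one, otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# The Python code only describes the data and the operation...
a = torch.randn(512, 512, device=device)
b = torch.randn(512, 512, device=device)

# ...while PyTorch's compiled core picks the kernel that actually runs:
# a GPU kernel (via CUDA on Nvidia hardware) or a CPU implementation.
c = a @ b

if device.type == "cuda":
    # GPU kernels are launched asynchronously; wait until the work is done.
    torch.cuda.synchronize()

print(device, c.shape)
```

Nothing in the Python code changes between the CPU and GPU paths; only the device the tensors are placed on does.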

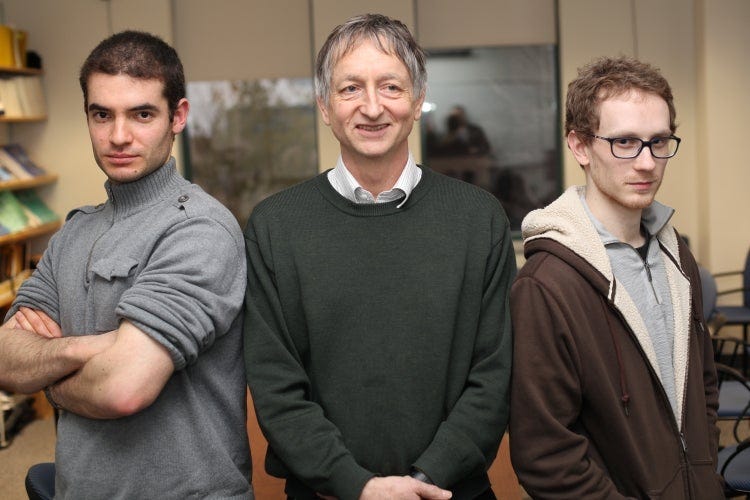

The CUDA computing platform was the breakthrough that allowed the scientific community to start leveraging GPUs for compute-heavy research. Because the chips are designed to massively parallelize mathematical operations, GPUs are extremely well suited to the matrix operations of linear algebra. As these matrix operations form the backbone of machine learning, the next breakthrough came in 2012, when Alex Krizhevsky, Ilya Sutskever and Geoffrey Hinton trained a large neural net, AlexNet, on Nvidia GPUs. AlexNet contained 60 million parameters and 650,000 neurons, allowing it to classify images across 1,000 categories. So basically, it could do cool things such as recognize a cat in an image.
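To make the link between neural nets and matrix operations concrete, here is a minimal pure-Python sketch of a single dense (fully connected) layer, y = relu(Wx + b). The weights are made-up toy values, and the helper names are hypothetical. Each output element is an independent dot product, which is exactly the kind of work a GPU spreads across thousands of cores at once; this sketch simply computes them one after another.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def dense_forward(W: Matrix, x: Vector, b: Vector) -> Vector:
    # One dot product per output neuron. None of these rows depends on
    # another, so a GPU can compute all of them in parallel.
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i
            for row, b_i in zip(W, b)]


def relu(v: Vector) -> Vector:
    # Standard non-linearity: negative activations are clipped to zero.
    return [max(0.0, t) for t in v]


# Toy layer: 3 inputs, 2 output neurons.
W = [[1.0, 2.0, 3.0],
     [-1.0, 0.5, 0.0]]
b = [0.0, -1.0]
x = [1.0, 1.0, 1.0]

y = relu(dense_forward(W, x, b))
num_params = sum(len(row) for row in W) + len(b)  # weights + biases

print(y, num_params)  # [6.0, 0.0] 8
```

A network like AlexNet stacks many such layers (plus convolutions, which also reduce to large matrix multiplications), which is how the parameter count climbs into the tens of millions.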

While the core algorithms of neural nets had already been developed by academics in the decades before, up until that point they had been of no real practical use, as the computing power needed to train the theorized models simply wasn't available. From 2012 onwards, the ingredients were suddenly in place for a Cambrian explosion in the field of machine learning. Firstly, thanks to decades of gamers buying GPUs, Nvidia had been able to develop the horsepower to run these compute-heavy operations. And secondly, the widespread use of the internet, and of social media in particular, had produced vast amounts of data to train the algorithms on. The basic equation: Nvidia GPUs plus large amounts of quality data plus machine learning mathematics results in what Jensen Huang describes as ‘intelligence factories’.

This set-up resulted in an incredibly fast pace of innovation in AI from 2012 onwards, with one breakthrough after the other and a large variety of deep neural net architectures, such as convolutional and recurrent neural nets. These could be further improved with reinforcement learning. For example, AlphaGo, which defeated Go champion Lee Sedol 4-1 in a five-game match in 2016, is a deep neural net trained in part with reinforcement learning through self-play. DeepMind's AlphaFold, whose creators shared the 2024 Nobel Prize in Chemistry, is another deep neural net; it predicts a protein's 3D structure from its amino-acid sequence.

The most recent major breakthrough was the transformer architecture, which puts attention mechanisms at the core of deep neural nets. Attention lets a model weigh how strongly each element of its input relates to every other element, for example each word in a text to every other word, which allows relationships in vast amounts of data to be captured. While the generalization capabilities of these transformer-based neural nets are impressive, under the hood we're still largely running plain old matrix operations and thus, on Nvidia hardware, CUDA.
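To show that attention really is "plain old matrix operations", here is a minimal pure-Python sketch of scaled dot-product attention, softmax(QKᵀ/√d_k)·V, as used in transformers. It is simplified on purpose: a single head, no masking and no learned projection matrices, with Q, K and V given directly as toy values. The helper names are hypothetical.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    # Each output entry is a row of A dotted with a column of B.
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A: Matrix) -> Matrix:
    return [list(col) for col in zip(*A)]


def softmax(row: List[float]) -> List[float]:
    # Subtract the max for numerical stability; the result sums to 1.
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q: Matrix, K: Matrix, V: Matrix) -> Tuple[Matrix, Matrix]:
    d_k = len(K[0])
    # How well each query matches each key: one matrix multiplication.
    scores = matmul(Q, transpose(K))
    # Scale and normalize each row into attention weights.
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    # Each output is a weighted mix of the value rows: another matrix multiplication.
    return matmul(weights, V), weights


# Toy input: 2 tokens of dimension 2. Both keys are identical, so every
# query attends equally to both tokens and receives the average value row.
Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [1.0, 0.0]]
V = [[1.0, 2.0], [3.0, 4.0]]

out, weights = attention(Q, K, V)
print(out)  # [[2.0, 3.0], [2.0, 3.0]]
```

Apart from the softmax, the work is two matrix multiplications, which is why GPUs (and CUDA) remain central to transformer workloads.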

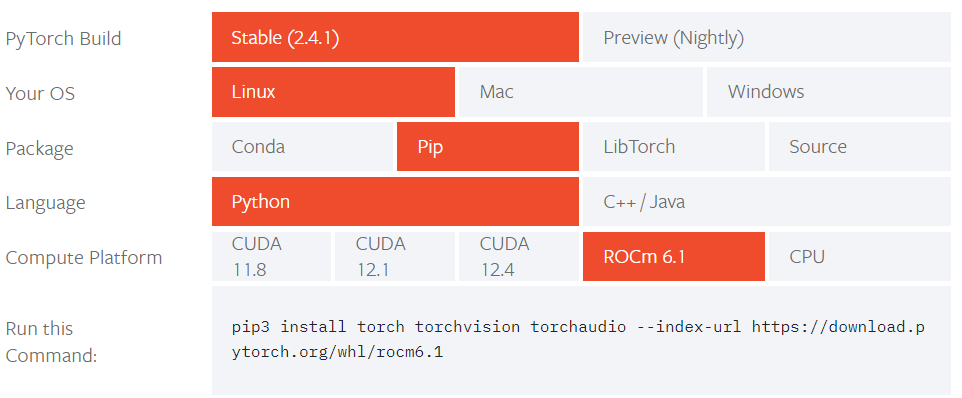

Meanwhile, both the AI community and the semiconductor industry are hard at work providing alternatives to Nvidia's hardware, which has turned out to be a real bottleneck in terms of availability over the last few years. One such innovation, providing more hardware abstraction for AI engineers, comes from the PyTorch Foundation. For example, if you install PyTorch on a Linux machine (Linux being the most widely used operating system in the cloud), you can now execute your Python code on AMD's competing ROCm computing platform, and thus on AMD's GPUs:

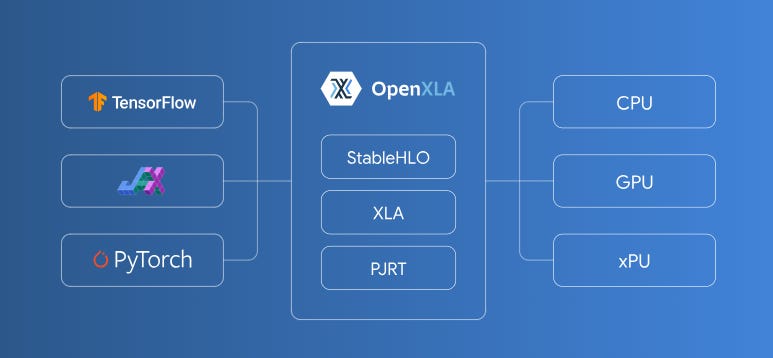
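A small sketch of what this looks like in practice, assuming PyTorch is installed. ROCm builds of PyTorch expose AMD GPUs through the same `torch.cuda` interface and the same `"cuda"` device string, so device-selection code written with Nvidia in mind runs unchanged. `torch.version.hip` and `torch.version.cuda` report which GPU platform the installed build targets; the `gpu_backend` helper is a hypothetical name used for illustration.

```python
import torch


def gpu_backend() -> str:
    # torch.version.hip is a version string on ROCm builds and None otherwise;
    # torch.version.cuda is a version string on CUDA builds and None otherwise.
    if torch.version.hip is not None:
        return "rocm"
    if torch.version.cuda is not None:
        return "cuda"
    return "cpu-only"


# On a ROCm build with an AMD GPU, torch.cuda.is_available() returns True
# and the "cuda" device refers to the AMD GPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

x = torch.ones(4, 4, device=device)
y = (x @ x).sum().item()  # every entry of x @ x is 4.0, so the sum is 64.0

print(gpu_backend(), device, y)
```

The design choice on PyTorch's side is to keep the user-facing API identical and swap the platform underneath, which is what lowers the switching cost away from Nvidia hardware.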

Google's more recent JAX library has been gaining steam as well, and this Python-based library likewise allows for a diversification of the accelerator hardware underneath. Engineers can run JAX on Nvidia's CUDA, but also on Google's internally developed TPUs, thanks to the XLA compiler.
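A minimal sketch, assuming JAX is installed: the same jitted Python function is compiled by XLA for whichever backend JAX finds (CPU, Nvidia GPU or TPU), without any change to the code. The `layer` function and its toy inputs are hypothetical examples.

```python
import jax
import jax.numpy as jnp


@jax.jit  # trace once, then let XLA compile for the available backend
def layer(W, x, b):
    return jnp.tanh(W @ x + b)


W = jnp.ones((3, 4))
x = jnp.arange(4.0)  # [0., 1., 2., 3.]
b = jnp.zeros(3)

y = layer(W, x, b)  # each entry is tanh(0 + 1 + 2 + 3) = tanh(6)

print(jax.default_backend())  # e.g. "cpu", "gpu" or "tpu"
print(jax.devices())
print(y)
```

The hardware choice lives entirely in which JAX backend is installed, not in the model code.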

XLA, short for Accelerated Linear Algebra, is the open-source answer to Nvidia's CUDA. This compiler allows code from the three main Python machine learning libraries, i.e. PyTorch, JAX and TensorFlow, to be compiled for a variety of underlying hardware. At the same time, XLA gives developers more granular control over how AI code is executed on the hardware with the help of custom C++ calls. This gives engineers access to lower-level capabilities similar to those they have with CUDA. PyTorch, on the other hand, is mainly a higher-level framework, with limited ability to work close to the hardware, but it offers coders user-friendliness in return.
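To peek at the hand-off between a Python library and XLA, the sketch below (assuming JAX is installed) uses JAX's ahead-of-time API: `jax.jit(f).lower(...)` traces the function into the intermediate representation that JAX passes to XLA, and `.compile()` asks XLA to compile it for the current backend. The exact text format printed (StableHLO in recent JAX versions) depends on the installed version; the function `f` is a hypothetical example.

```python
import jax
import jax.numpy as jnp


def f(x):
    # A single matrix product: x (2x3) times its transpose (3x2).
    return jnp.dot(x, x.T)


x = jnp.ones((2, 3))

lowered = jax.jit(f).lower(x)  # trace f and lower it to XLA's input IR
hlo_text = lowered.as_text()   # human-readable IR handed to XLA
compiled = lowered.compile()   # XLA compiles it for the current backend
result = compiled(x)           # 2x2 matrix, every entry 3.0

print(hlo_text[:500])
print(result)
```

The same lowered program can be compiled for a CPU, GPU or TPU backend, which is the hardware portability described above.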

For premium subscribers, we’ll go much deeper into current developments in the world of AI. We’ll talk to a range of engineers, directors and scientists at leading companies such as Google, Microsoft, Apple, Nvidia, Amazon, Bloomberg and Qualcomm, as well as LLM engineers from startups to cover the following topics:

- Current and future innovations in the AI hardware and software stack, and how these will impact the power dynamics across the AI value chain, not only between Nvidia and competitors such as AMD, but also between Nvidia and customers such as the various hyperscalers. We'll discuss developments at Google, Microsoft and Amazon in particular.

- We'll detail how the cloud CPU market is becoming further commoditized, an important topic for both Intel and AMD investors, and we'll explain under which circumstances the same can happen in the cloud GPU market.

- OpenAI's plan to disrupt Nvidia, and Nvidia's defenses against the various threats.

- Nvidia’s special relationship with new AI clouds such as CoreWeave and Lambda Labs, and how this led to the multi-billion dollar deal between Microsoft and CoreWeave.

- The outlook for AI demand, and a detailed analysis of Nvidia’s financials and valuation.

https://www.techinvestments.io/p/the-battle-for-the-ai-stack-nvidia