Today, we are releasing our tour of the xAI Colossus Supercomputer. For those who have heard stories of Elon Musk’s xAI building a giant AI supercomputer in Memphis, this is that cluster. With 100,000 NVIDIA H100 GPUs, this multi-billion-dollar AI cluster is notable not just for its size but also for the speed at which it was built: the teams stood it up in only 122 days. Today, we get to show you inside the building.

Of course, we have a video for this one that you can find on X or on YouTube.

Normally, on STH, we do everything entirely independently. This was different. Supermicro is sponsoring this piece because it is easily the most costly one for us to do this year. Also, some things will be blurred out, or I will be intentionally vague, due to the sensitivity of building the largest AI cluster in the world. We received special approval from Elon Musk and his team to show this.

Supermicro Liquid Cooled Racks at xAI

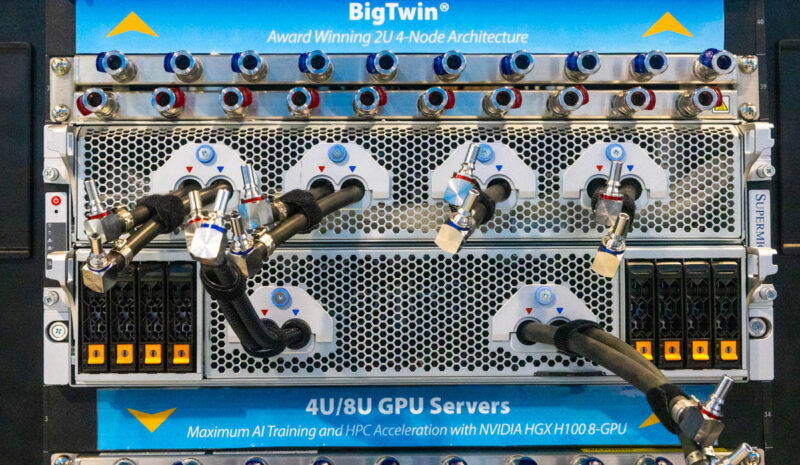

The basic building block for Colossus is the Supermicro liquid-cooled rack. Each rack holds eight 4U servers, each with eight NVIDIA H100s, for a total of 64 GPUs per rack. These eight GPU servers, plus a Supermicro Coolant Distribution Unit (CDU) and associated hardware, make up one GPU compute rack.

These racks are arranged in groups of eight, for 512 GPUs per group, plus networking, forming mini clusters within the much larger system.
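To make the packaging hierarchy concrete, here is a small back-of-the-envelope sketch that multiplies out the figures above: 8 GPUs per server, 8 servers per rack, 8 racks per group. The constant names are our own, and the last step assumes, purely for illustration, that all 100,000 GPUs are packaged in these 512-GPU groups, which we are not claiming is the actual layout.

```python
# Back-of-the-envelope model of the Colossus packaging hierarchy.
# Constant names are hypothetical; values come from the figures above.
GPUS_PER_SERVER = 8    # 8-GPU NVIDIA HGX H100 tray in each 4U server
SERVERS_PER_RACK = 8   # eight 4U GPU servers per liquid-cooled rack
RACKS_PER_GROUP = 8    # racks arranged in groups of eight
TOTAL_GPUS = 100_000   # headline (rounded) cluster size

gpus_per_rack = GPUS_PER_SERVER * SERVERS_PER_RACK
gpus_per_group = gpus_per_rack * RACKS_PER_GROUP

# Illustration only: assumes every GPU sits in one of these groups.
full_racks, rack_remainder = divmod(TOTAL_GPUS, gpus_per_rack)
full_groups, leftover = divmod(TOTAL_GPUS, gpus_per_group)

print(f"GPUs per rack:  {gpus_per_rack}")
print(f"GPUs per group: {gpus_per_group}")
print(f"Full racks for {TOTAL_GPUS:,} GPUs: {full_racks} (+{rack_remainder} GPUs)")
print(f"Full groups for {TOTAL_GPUS:,} GPUs: {full_groups} (+{leftover} GPUs)")
```

This prints 64 GPUs per rack and 512 per group, or roughly 195 full groups (about 1,562 racks) for 100,000 GPUs. The non-zero remainders simply reflect that 100,000 is a rounded headline figure rather than an exact multiple of the rack or group size.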

Here, xAI is using the Supermicro 4U Universal GPU system. These are the most advanced AI servers on the market right now, for two reasons. One is the degree of liquid cooling. The other is how serviceable they are.

We first saw the prototype for these systems at Supercomputing 2023 (SC23) in Denver about a year ago. We were not able to open one of these systems in Memphis because they were busy running training jobs while we were there. One example of their serviceability is that each system sits on trays that can be serviced without removing the system from the rack. The 1U rack manifold brings cool liquid in and carries warmed liquid out for each system. Quick disconnects make it fast to get the liquid cooling out of the way, and we showed last year how these can be removed and installed one-handed. Once these are disconnected, the trays can be pulled out for service.

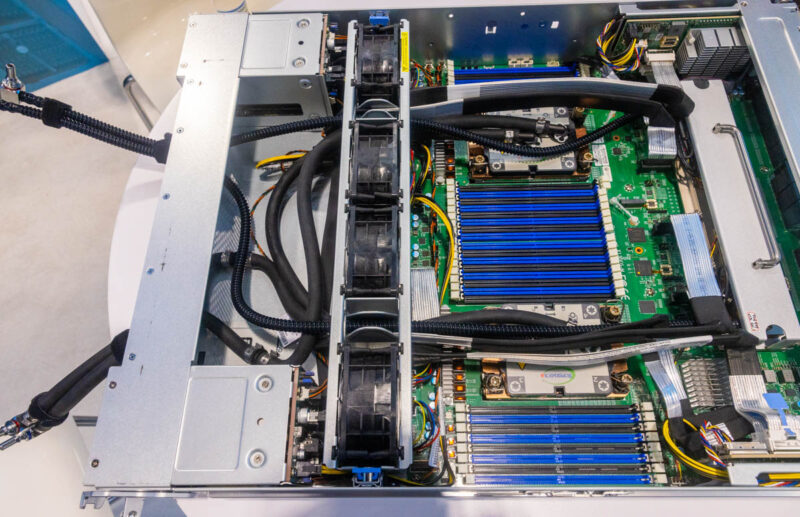

Luckily, we have images of the prototype for this server, so we can show you what is inside these systems. Aside from the 8-GPU NVIDIA HGX tray that uses custom Supermicro liquid cooling blocks, the CPU tray shows why this is a next-level design that is unmatched in the industry.

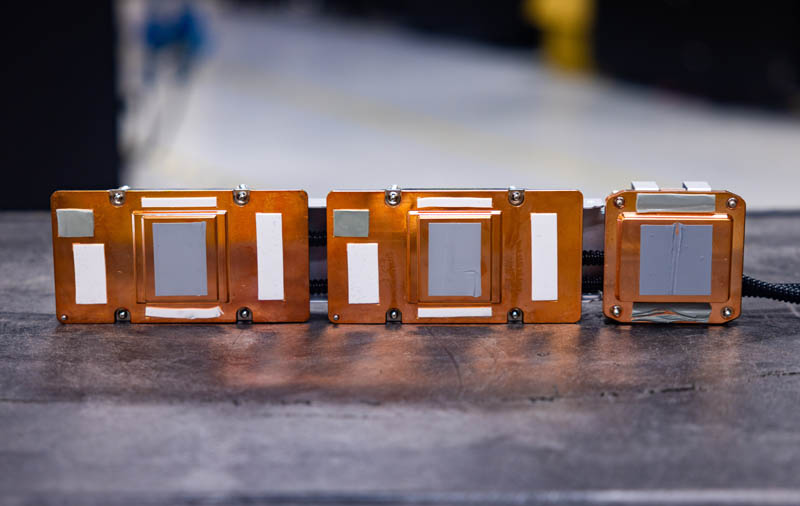

The two x86 CPU liquid cooling blocks in the SC23 prototype above are fairly common. What is unique is on the right-hand side. Supermicro’s motherboard integrates the four Broadcom PCIe switches used in almost every HGX AI server today, instead of putting them on a separate board. Supermicro then adds a custom liquid cooling block to cool these four PCIe switches. Other AI servers in the industry are designed for air cooling first, with liquid cooling added to that air-cooled design afterward. Supermicro’s design is liquid-cooled from the ground up, and it all comes from one vendor.

It is analogous to cars: some are designed to be gas-powered first and then have an EV powertrain fitted to the chassis, while others are designed from the ground up to be EVs. This Supermicro system is the latter, while other HGX H100 systems are the former. We have had hands-on time with most of the public HGX H100/H200 platforms since they launched, as well as some of the hyperscale designs. Make no mistake, there is a big gap between this Supermicro system and others, including some of Supermicro’s other designs that can be liquid- or air-cooled that we have reviewed previously.

Inside the 100K GPU xAI Colossus Cluster that Supermicro Helped Build for Elon Musk