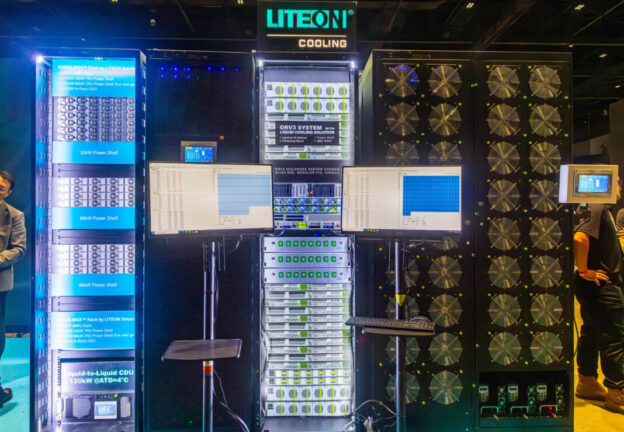

At OCP Summit 2024, the ORv3 rack was a hot topic. NVIDIA took the base ORv3 design and built an MGX AI rack for its high-end AI servers with a few upgrades. LITEON showed up at the OCP Summit this year with a clear message for OEMs, hyper-scalers, and more: Bring your nodes and switches. LITEON has a liquid-cooled rack with power, monitoring, and even CDUs and heat exchangers for high-end AI systems like the NVIDIA GB200 NVL72.

Here is a quick short on the racks:

LITEON took time to show us around its booth, setting aside special time for STH, so we have to say this is sponsored.

LITEON Shows NVIDIA GB200 NVL72 Rack at OCP Summit 2024

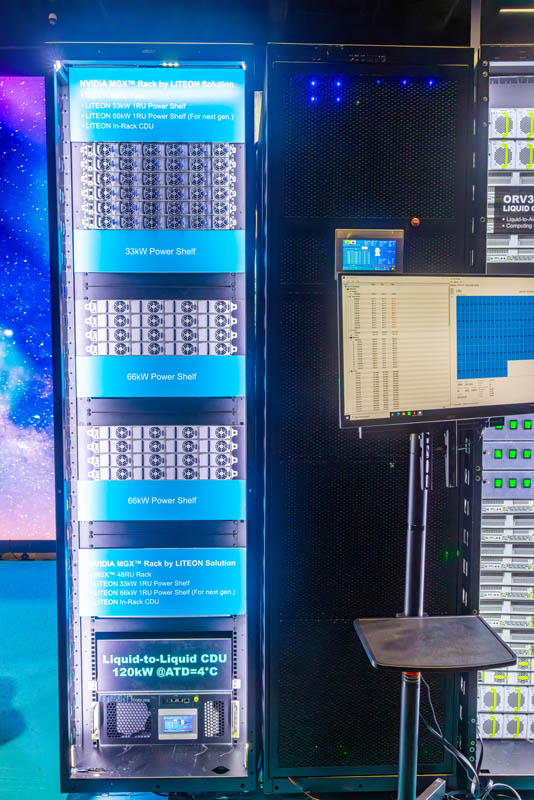

At OCP Summit 2024, this solution caught our eye. There was an NVIDIA MGX 48U rack from LITEON with the 33kW 1U power shelves for this generation.

Moving to the back was perhaps more interesting. LITEON is selling entire racks with power delivery via the busbar in the rear. For those unfamiliar with ORv3, this is the latest generation OCP rack, but with a big twist. OCP racks we have seen in the past were focused on a single customer, or a handful of customers. NVIDIA’s decision to build around the ORv3 rack for its GB200 NVL72 means that there is interest in the rack not just from hyper-scalers, but also from many other companies.

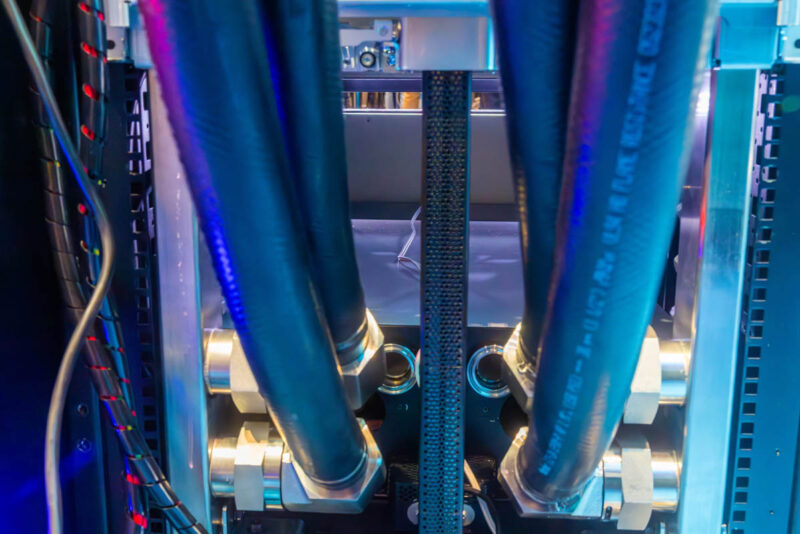

There are two key innovations here. First is that busbar for power. Instead of each server having its own individual pair of power supplies, AC power is converted to 48V DC (in the current generation) and then distributed to servers in the rack via a busbar that is engaged when the server is slid into place. The second main innovation is that there are also blind mate liquid cooling nozzles at the back of the rack. As that server is slid into the rack, it can also tap into the rack’s liquid cooling loop. Instead of needing to connect each server individually, this mating of the liquid cooling and power saves time when servicing the systems.
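To put the busbar in perspective, here is a minimal sketch of the current involved, using the 33kW power shelf and 48V DC figures mentioned above. The function name is ours, and the math ignores conversion losses and assumes a flat 48V nominal bus, so treat the result as a rough order-of-magnitude figure rather than a LITEON specification.

```python
def busbar_current_amps(power_watts: float, bus_voltage: float = 48.0) -> float:
    """Return the DC current (in amps) needed to deliver power_watts at bus_voltage.

    Hypothetical helper for illustration only: uses I = P / V and ignores
    conversion losses and voltage drop along the busbar.
    """
    if bus_voltage <= 0:
        raise ValueError("bus_voltage must be positive")
    return power_watts / bus_voltage


# 33kW power shelf from the article, on an assumed 48V nominal bus
shelf_power_w = 33_000
shelf_current = busbar_current_amps(shelf_power_w)
print(f"One 33kW shelf at 48V: {shelf_current:.1f} A")  # 687.5 A
```

Hundreds of amps per shelf helps explain why the rack uses a solid busbar in the rear instead of running cables to individual server power supplies.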

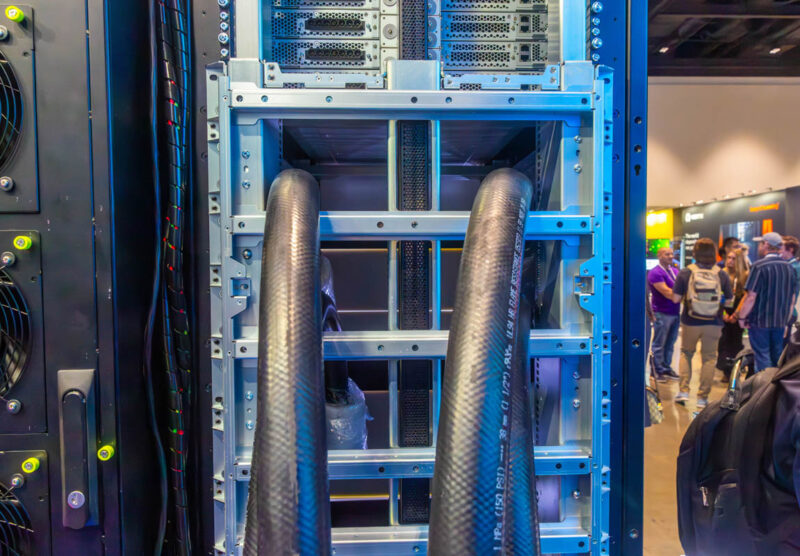

On the back of the rack, we can see the MGX frame for the NVL72 deployment, as well as some large liquid cooling hoses.

Going to the bottom of the rack, we can see these hoses connect to the rack manifolds on either side of the rack. Liquid is pumped through these manifolds to feed the servers and switches placed in the rack.

Still, all of the heat has to go somewhere after it is removed from the AI servers and switches. One option LITEON showed was a 140kW sidecar. We were not allowed to show you the inside of this, but imagine that behind the fans you see there is a big radiator. Liquid quickly carries heat away from the AI rack, and the sidecar then uses big heat exchangers and fans to push that heat into the data center air, where air handlers remove it from the data center.
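For a sense of scale, here is a rough sketch of how much coolant has to move to carry 140kW. It uses the standard relation Q = ṁ · c_p · ΔT. The 10°C coolant temperature rise, the use of plain water, and the function name are our assumptions for illustration, not figures from LITEON. Real loops often run water-glycol mixes with different properties.

```python
WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0  # approximate value for liquid water
WATER_DENSITY_KG_PER_L = 1.0             # approximate, near room temperature


def coolant_flow_l_per_min(heat_watts: float, delta_t_kelvin: float) -> float:
    """Estimate the water flow (L/min) needed to carry heat_watts at a given temperature rise.

    Hypothetical helper for illustration: solves Q = m_dot * c_p * dT for m_dot,
    then converts kg/s to L/min assuming plain water.
    """
    if delta_t_kelvin <= 0:
        raise ValueError("delta_t_kelvin must be positive")
    mass_flow_kg_per_s = heat_watts / (WATER_SPECIFIC_HEAT_J_PER_KG_K * delta_t_kelvin)
    return mass_flow_kg_per_s / WATER_DENSITY_KG_PER_L * 60.0


# 140kW sidecar rating from the article; the 10 K temperature rise is an assumption
flow = coolant_flow_l_per_min(140_000, 10.0)
print(f"Roughly {flow:.0f} L/min of water for 140kW at a 10 K rise")
```

Under those assumptions, the answer lands at roughly 200 liters per minute, which fits with the size of the hoses and manifolds on the rack.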

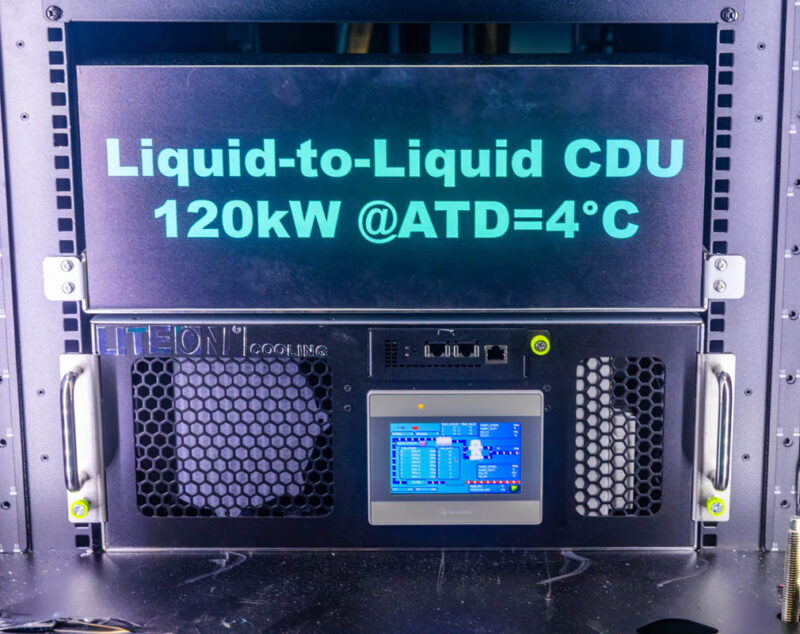

Alternatively, LITEON has its 120kW CDU. This is the company’s custom unit, designed to transfer heat from the in-rack loop directly to a facility water loop.

As you can see, there is a management interface on this one, which we want to touch on next.