Microsoft Azure has successfully deployed and activated servers powered by NVIDIA’s GB200 AI processors, marking a significant milestone in artificial intelligence infrastructure.

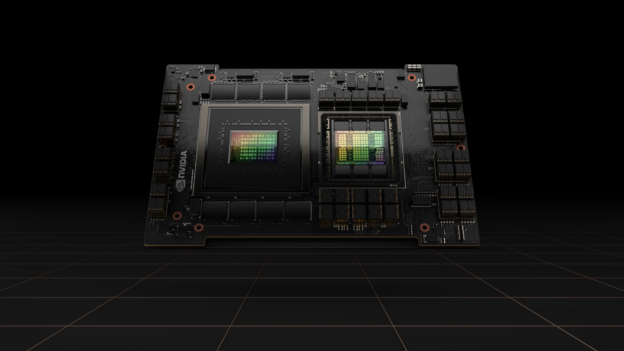

Microsoft Azure has become the first cloud service provider to deploy and activate servers powered by NVIDIA’s GB200 AI processors. In a post on X, Azure showed off its custom NVIDIA GB200 AI server racks and added, “We’re optimizing at every layer to power the world’s most advanced AI models, leveraging Infiniband networking and innovative closed-loop liquid cooling.”

Microsoft’s implementation features custom-designed server racks, each housing an estimated 32 B200 processors. Azure has developed an advanced closed-loop liquid cooling system to manage the immense heat generated by these high-performance units. The setup showcases both Microsoft’s commitment to pushing the boundaries of AI computing and its expertise in thermal management for next-generation hardware.


The Blackwell B200 GPU represents a substantial improvement in AI processing capabilities. Compared to its predecessor, the H100, the B200 offers:

- About 2.3x higher performance for FP8/INT8 operations (4,500 TFLOPS/TOPS vs 1,980 TFLOPS/TOPS)

- 9 PFLOPS of performance using the FP4 data format
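The FP8/INT8 speedup follows directly from the ratio of the two throughput figures quoted above. This assumes both numbers are stated on the same basis (for example, both without sparsity); if they are not, the ratio would differ.

$$
\text{speedup}_{\text{FP8}} = \frac{4{,}500\ \text{TFLOPS}}{1{,}980\ \text{TFLOPS}} \approx 2.27
$$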

These gains should shorten training times for complex large language models (LLMs) and could transform AI applications across many industries.

Microsoft CEO Satya Nadella emphasized the company’s ongoing collaboration with NVIDIA in another post on X, stating, “Our long-standing partnership with NVIDIA and deep innovation continues to lead the industry, powering the most sophisticated AI workloads.”

While the current deployment appears to be for testing purposes, it signals Microsoft’s readiness to offer Blackwell-based servers for commercial workloads soon. Additional details about the Blackwell server offering are expected at Microsoft’s upcoming Ignite conference in Chicago, scheduled for November 18-22, 2024.

As the first cloud provider to operationalize NVIDIA Blackwell systems, Microsoft Azure has positioned itself as a leader in AI infrastructure. This move will likely accelerate the development of more advanced AI models and applications, potentially transforming various sectors that rely on cloud-based AI services.

The successful implementation of these high-density, high-performance computing systems also sets a new standard for data center design and cooling technologies. As more providers adopt similar technologies, we may witness a shift in the construction and operation of data centers to meet the growing demands of AI workloads.

Blackwell server deployments are expected to ramp up in late 2024 or early 2025, setting the stage for the next phase of AI development. Microsoft’s early adoption may give it a competitive edge in attracting AI researchers and enterprises looking for the most advanced computing resources available.

https://www.storagereview.com/news/microsoft-azure-pioneers-nvidia-blackwell-technology-with-custom-server-racks?amp