With reference designs derived from the existing Nvidia Cloud Partner reference architecture, Nvidia right-sizes for enterprise customers.

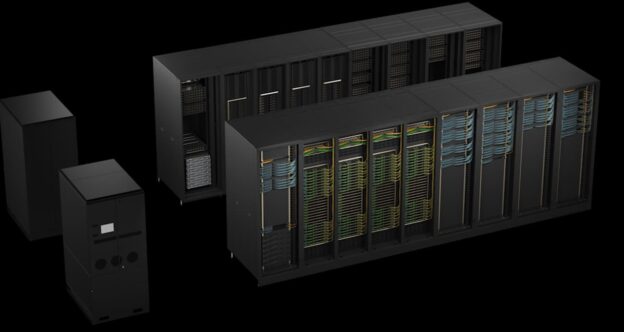

Around the OCP Summit, a number of vendors released AI reference architectures developed with Nvidia. These designs cover the infrastructure hardware needed to deploy Nvidia AI hardware at scale. Nvidia has now announced its own take on a reference architecture for enterprise-scale customers, built entirely on Nvidia servers and networking technologies.

Optimizing from Design to Deployment

The newly released reference architecture (RA) offering targets enterprise-class deployments ranging from 32 to 1,024 GPUs. It is derived from the existing Nvidia Cloud Partner (NCP) design, which focuses on deployments of 128 to more than 16,000 nodes.

The NCP architecture is designed as a guide for customers working with Nvidia's professional services. The new enterprise-class RAs, by contrast, are being used by Nvidia partner hardware vendors such as HPE, Dell, and Supermicro to develop Nvidia-certified solutions built on Nvidia Hopper GPUs, Blackwell GPUs, Grace CPUs, Spectrum-X networking, and BlueField DPU architectures.

Each of these technologies already has a node-level certification program, which allows partner vendors to deliver systems optimized for the services their customers want to develop. However, using certified components alone is not sufficient for a vendor to release a certified solution.

The server configuration must also pass a testing program that includes thermal analysis, mechanical stress tests, power consumption evaluations, and signal integrity assessments. In addition, it must meet a set of operational testing requirements covering management capabilities, security, and networking performance across a range of workloads, applications, and use cases.

Once a certified configuration has been selected, Nvidia's RA guides also offer guidance on building clusters from those nodes using common design patterns.

As announced, the enterprise reference architectures share several common elements, including recommendations for:

- Accelerated infrastructure using optimized Nvidia-certified server configurations.

- AI-optimized networking based on Spectrum-X Ethernet and BlueField-3 DPUs.

- The Nvidia AI Enterprise software platform for production AI deployments.

Scale-out vs. Scale-up

Because customer demand varies, the Nvidia RAs support both scale-up and scale-out deployments, depending on customer needs and long-term planning.

In the simplest terms, a scale-up deployment uses NVLink connections to create a high-bandwidth, multi-node GPU cluster that can be treated as a single, huge GPU, with workloads distributed across multiple servers.

These systems can scale up to the maximum capability of the NVLink connection. For example, the GB200 NVL72 can scale up to 72 GPUs functioning as a single unit.

In the scale-out model, as described in the RA outline, clusters are scaled using optimized PCIe connections and, depending on the architectural design chosen, can grow from four to 96 cluster nodes. Workloads are distributed across servers that can have different levels of optimization and performance.

Benefits of Choosing the RA Designs and Certified Vendors

Overall, choosing systems built on these reference architecture designs means benefiting from the years of engineering expertise and experience that Nvidia has acquired in building large-scale systems. Nvidia divides these benefits into those that apply to IT and those that apply to business operations.

For IT, Nvidia says these designs deliver higher performance, easier support, reduced complexity, improved TCO, and simpler scaling and management of deployments.

On the business side, Nvidia highlights reduced costs, better efficiency, accelerated time to market, and significant risk mitigation.

The guidance provided is designed to accelerate the transition of data centers from traditional working models to AI factories.

Vendors using the RAs benefit from Nvidia's experience with their hardware and software solutions, while customers who deploy the certified solutions are assured of minimal roadblocks on the path to a rapid and effective deployment.

https://www.datacenterfrontier.com/machine-learning/article/55239363/nvidia-releases-ai-reference-architectures-for-enterprise-class-hardware