Humanoid robots present a multifaceted challenge at the intersection of mechatronics, control theory, and AI. Their dynamics and control are complex, requiring advanced tools, techniques, and algorithms to maintain balance during locomotion and manipulation tasks. Collecting robot data and integrating sensors also pose significant challenges, as humanoid robots require a fusion of sophisticated sensors and high-resolution cameras to perceive the environment effectively and reason about how to interact with their surroundings in real time. The computational demands of real-time sensor processing and decision-making also necessitate powerful onboard computers.

Developing technologies, tools, and robot foundation models that enable adaptive robot behavior and facilitate natural human-robot interaction remains an ongoing research focus. NVIDIA Project GR00T is an active research initiative to help the ecosystem of humanoid robot builders accelerate these next-generation robot development efforts. In this post, we discuss the new GR00T workflows for humanoid development, including:

- GR00T-Gen for diverse environment generation

- GR00T-Mimic for robot motion and trajectory generation

- GR00T-Dexterity for fine-grained and dexterous manipulation

- GR00T-Mobility for locomotion and navigation

- GR00T-Control for whole-body control (WBC)

- GR00T-Perception for multimodal sensing

GR00T-Gen for diverse environment generation

GR00T-Gen is a workflow to generate robot tasks and simulation-ready environments in OpenUSD for training generalist robots to perform manipulation, locomotion, and navigation.

For robust robot learning, it is important to train in diverse environments with a variety of objects and scenes. Generating a large variety of environments in the real world is often expensive, time-consuming, and not accessible to most developers, making simulation a compelling alternative.

GR00T-Gen provides realistic and diverse human-centric environments, created using large language models (LLMs) and 3D generative AI models. It features over 2,500 3D assets spanning more than 150 object categories. To create visually diverse scenes, multiple textures are included for domain randomization in simulation. Domain randomization enables trained models and policies to generalize effectively when deployed in the real world.
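As a rough illustration of domain randomization, the sketch below samples a fresh visual configuration for each training episode. It is a minimal sketch, not the GR00T-Gen API: the texture names, parameter ranges, and the `SceneParams` and `sample_scene` names are all hypothetical.

```python
import random
from dataclasses import dataclass

# Hypothetical texture names and parameter ranges, chosen only for illustration.
TEXTURES = ["wood", "marble", "brushed_metal", "fabric"]


@dataclass
class SceneParams:
    table_texture: str
    light_intensity: float  # arbitrary units
    camera_yaw_deg: float   # small perturbation around the nominal view


def sample_scene(rng: random.Random) -> SceneParams:
    """Draw one randomized scene configuration."""
    return SceneParams(
        table_texture=rng.choice(TEXTURES),
        light_intensity=rng.uniform(0.5, 1.5),
        camera_yaw_deg=rng.uniform(-10.0, 10.0),
    )


rng = random.Random(0)  # fixed seed so the sampled scenes are reproducible
scenes = [sample_scene(rng) for _ in range(100)]
```

A policy trained across many such samples never sees the same appearance twice, which is the intuition behind its improved robustness to real-world visual variation.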

GR00T-Gen provides cross-embodiment support for mobile manipulators and humanoid robots and includes over 100 tasks like opening doors, pressing buttons, and navigation.

GR00T-Mimic for robot motion and trajectory generation

GR00T-Mimic is a robust workflow to generate motion data from teleoperated demonstrations for imitation learning. Imitation learning is an approach to training robots where robots acquire skills through observation and replication of actions demonstrated by a teacher. A critical component of this training process is the volume and quality of demonstration data available.
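To make the idea concrete, here is a minimal sketch of behavior cloning, one common form of imitation learning: a policy is fit by supervised regression on (state, action) pairs recorded from a teacher. The one-dimensional linear policy and the toy demonstration data are assumptions for illustration; real humanoid policies are typically neural networks trained on far richer observations.

```python
def fit_linear_policy(states, actions):
    """Least-squares fit of action = w * state + b to demonstration pairs."""
    n = len(states)
    mean_s = sum(states) / n
    mean_a = sum(actions) / n
    cov = sum((s - mean_s) * (a - mean_a) for s, a in zip(states, actions))
    var = sum((s - mean_s) ** 2 for s in states)
    w = cov / var
    b = mean_a - w * mean_s
    return w, b


# Toy demonstrations: the teacher pushes the state toward zero.
demo_states = [-2.0, -1.0, 0.0, 1.0, 2.0]
demo_actions = [-0.5 * s for s in demo_states]

w, b = fit_linear_policy(demo_states, demo_actions)


def learned_policy(state):
    """The cloned policy, usable on states not seen in the demonstrations."""
    return w * state + b
```

Because the fit is only as good as the data it sees, both the number and the quality of demonstrations directly bound what the learned policy can do.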

For humanoid robots to effectively and safely navigate human-centric environments, it is important that their “teachers” are human demonstrators, allowing robots to learn by mimicking human behavior. However, a significant challenge arises due to the scarcity of existing high-quality training data.

To address this, there is a need to develop extensive datasets that capture human actions. One promising method for generating this data is through teleoperation, where a human operator remotely controls a robot to demonstrate specific tasks. While teleoperation can produce high-fidelity demonstration data, it is constrained by the number of individuals who can access these systems at a given time.

GR00T-Mimic aims to scale up the data collection pipeline. The approach involves gathering a limited number of human demonstrations in the physical world using extended reality (XR) and spatial computing devices like the Apple Vision Pro. These initial demonstrations are then used to generate synthetic motion data, effectively scaling up the demonstration dataset. The goal is to create a comprehensive repository of human actions for robots to learn from, thus enhancing their ability to perform tasks in real-world settings.
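One simple way to picture how a few demonstrations can be expanded into many is to store a demonstrated trajectory relative to the object it manipulates, then replay it against new object placements. The sketch below does this for 2D positions with translation only. It is an illustrative assumption, not the GR00T-Mimic algorithm: the function names are hypothetical, and a practical system would handle full 6-DoF poses, collisions, and success filtering.

```python
import random


def to_object_frame(trajectory, object_pos):
    """Express end-effector waypoints relative to the object position."""
    ox, oy = object_pos
    return [(x - ox, y - oy) for x, y in trajectory]


def retarget(relative_traj, new_object_pos):
    """Re-anchor an object-relative trajectory at a new object position."""
    nx, ny = new_object_pos
    return [(dx + nx, dy + ny) for dx, dy in relative_traj]


# One human demonstration that ends at the object.
human_demo = [(0.0, 0.0), (0.2, 0.1), (0.4, 0.2)]
source_object = (0.4, 0.2)
relative = to_object_frame(human_demo, source_object)

# Generate many synthetic demonstrations for randomized object placements.
rng = random.Random(0)
synthetic_demos = []
for _ in range(50):
    new_object = (rng.uniform(0.2, 0.6), rng.uniform(0.0, 0.4))
    synthetic_demos.append((new_object, retarget(relative, new_object)))
```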

To further support GR00T-Mimic, NVIDIA Research also released SkillMimicGen, a fundamental first step to solve real-world manipulation tasks with minimal human demonstrations.

GR00T-Dexterity for fine-grained and dexterous manipulation

GR00T-Dexterity is a suite of models and policies for fine-grained dexterous manipulation and reference workflows to develop them.

Traditional robotics grasping requires the integration of multiple complex components, from identifying grasp points to planning motions and controlling fingers. For robots with many actuators, managing these systems—especially using state machines to handle failures like missed grasps—makes end-to-end grasping a significant challenge.

GR00T-Dexterity introduces a workflow built on the research paper DextrAH-G, a reinforcement learning (RL)-based approach to policy development for robot dexterity. This workflow enables the creation of an end-to-end, pixels-to-action grasping system that is trained in simulation and deployable to a physical robot. The workflow is designed to produce policies capable of fast, reactive grasping from depth stream input that generalize to new objects.

The process involves creating a geometric fabric to define the robot’s motion space and simplify grasping actions, optimized for parallelized training. Using NVIDIA Isaac Lab, a fabric-guided policy is trained by applying reinforcement learning across multiple GPUs to generalize grasping behaviors. Finally, the learned policy is distilled into a real-world-ready version using depth input through imitation learning, producing a robust policy within a few hours.

Note that the GR00T-Dexterity workflow preview is based on the NVIDIA research paper, DextrAH-G: Pixels-to-Action Dexterous Arm-Hand Grasping with Geometric Fabrics and has been migrated from NVIDIA Isaac Gym (deprecated) to Isaac Lab. If you’re a current Isaac Gym user, follow the tutorials and migration guide to get started with Isaac Lab.

GR00T-Mobility for locomotion and navigation

GR00T-Mobility is a suite of models and policies for locomotion and navigation and reference workflows to develop them.

Classical navigation methods struggle in cluttered environments and demand extensive tuning, while learning-based approaches face challenges in generalizing to new environments.

GR00T-Mobility introduces a novel workflow built on RL and imitation learning (IL) supported in Isaac Lab, designed to create a mobility generalist for navigation across varied settings and embodiments.

By leveraging world modeling using NVIDIA Isaac Sim, this workflow generates a rich latent representation of environment dynamics, enabling more adaptable training. The workflow decouples world modeling from action policy learning and RL fine-tuning, which enhances flexibility and supports diverse data sources for greater generalization.

A model trained (using this workflow) solely on photorealistic synthetic datasets from Isaac Sim enables zero-shot sim-to-real transfer and can be applied to a range of embodiments, including differential drive, Ackermann, quadrupeds, and humanoids.
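One way to see why a single navigation model can serve several wheeled embodiments: if the policy outputs a body-level velocity command (linear speed `v` and yaw rate `omega`), a thin kinematic layer can convert it into platform-specific actuator targets. The sketch below uses the standard differential-drive and kinematic bicycle formulas. That the model emits such a command, and the function names used here, are assumptions for illustration; legged platforms would instead need a locomotion controller to track the command.

```python
import math


def differential_drive_wheels(v, omega, track_width):
    """Left and right wheel linear speeds (m/s) for a differential-drive base."""
    half = omega * track_width / 2.0
    return v - half, v + half


def ackermann_steering_angle(v, omega, wheelbase):
    """Front steering angle (rad) from the kinematic bicycle model."""
    if abs(v) < 1e-6:
        if abs(omega) < 1e-6:
            return 0.0  # stationary: keep wheels straight
        raise ValueError("an Ackermann vehicle cannot turn in place")
    return math.atan(wheelbase * omega / v)


# The same body-level command mapped to two embodiments.
v, omega = 1.0, 0.5
left, right = differential_drive_wheels(v, omega, track_width=0.4)
steer = ackermann_steering_angle(v, omega, wheelbase=2.0)
```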

This workflow builds on NVIDIA Applied Research efforts presented in X-MOBILITY: End-To-End Generalizable Navigation via World Modeling.

GR00T-Control for whole-body control

GR00T-Control is a suite of advanced motion planning and control libraries, models, policies and reference workflows to develop WBC. Reference workflows exercise various platforms, pretrained models and accelerated libraries.

To achieve precise and responsive humanoid robot control, especially in tasks requiring dexterity and locomotion, WBC is essential. GR00T-Control introduces a learning-based alternative to traditional model predictive control (MPC), with a workflow developed by the NVIDIA Applied Research team and integrated with Isaac Lab. This work is based on the original research presented in OmniH2O: Universal and Dexterous Human-to-Humanoid Whole-Body Teleoperation and Learning, as well as the newly released HOVER: Versatile Neural Whole-Body Controller for Humanoid Robots.

This reference workflow enables the development of whole-body humanoid control policies (WBC Policy) for both teleoperation and autonomous control. For OmniH2O’s teleoperation, input methods such as VR headsets, RGB cameras, and verbal commands allow for high-precision human control. Meanwhile, HOVER’s multimode policy distillation framework facilitates seamless transitions between autonomous task modes, making it adaptable for complex tasks.

The WBC Policy workflow uses a sim-to-real learning pipeline. It starts by training a privileged control policy in simulation with reinforcement learning using Isaac Lab, which serves as the “teacher” model with access to detailed motion data. This model is then distilled into a deployable real-world version capable of operating with limited sensory input, addressing challenges like teleoperation delays, restricted inputs from VR or visual tracking (for OmniH2O) and adaptability across multiple autonomous task modes (for HOVER).
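The teacher-student idea can be sketched with a toy example. Below, a privileged teacher sees the true velocity (available only in simulation), while the student sees only the current and previous positions, as a real sensor might provide. The student is fit by regression on the teacher's actions. This is a minimal sketch under stated assumptions: the linear policies, gains, and function names are hypothetical, whereas the actual workflow trains neural network policies with reinforcement learning in Isaac Lab.

```python
import random

DT = 0.1  # time between position samples, in seconds (assumed)


def teacher_policy(pos, vel):
    """Privileged teacher: uses the true velocity, known only in simulation."""
    return -2.0 * pos - 0.5 * vel


def collect_dataset(rng, n):
    """Sample random states and label them with the teacher's actions."""
    data = []
    for _ in range(n):
        pos = rng.uniform(-1.0, 1.0)
        vel = rng.uniform(-1.0, 1.0)
        prev_pos = pos - vel * DT  # what a position sensor saw one step earlier
        data.append(((pos, prev_pos), teacher_policy(pos, vel)))
    return data


def fit_student(data):
    """Least-squares fit of action = w0*pos + w1*prev_pos (2x2 normal equations)."""
    s00 = sum(o[0] * o[0] for o, _ in data)
    s01 = sum(o[0] * o[1] for o, _ in data)
    s11 = sum(o[1] * o[1] for o, _ in data)
    t0 = sum(o[0] * a for o, a in data)
    t1 = sum(o[1] * a for o, a in data)
    det = s00 * s11 - s01 * s01
    return (t0 * s11 - t1 * s01) / det, (t1 * s00 - t0 * s01) / det


dataset = collect_dataset(random.Random(0), 200)
w0, w1 = fit_student(dataset)


def student_policy(pos, prev_pos):
    """Deployable student: needs only quantities a real sensor can measure."""
    return w0 * pos + w1 * prev_pos
```

In this toy case the student can recover the teacher exactly, because velocity is implied by two consecutive positions. In practice the student only approximates the teacher, and the quality of that approximation depends on how informative the restricted inputs are.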

A whole-body control policy (developed using the OmniH2O workflow) provides 19 degrees of freedom for precise humanoid robot control.

GR00T-Control offers roboticists tools for further exploration of learning-based WBC for humanoids.

GR00T-Perception for multimodal sensing

GR00T-Perception is a suite of advanced perception libraries (such as nvblox and cuVSLAM), foundation models (such as FoundationPose and RT-DETR), and reference workflows built on Isaac Sim and NVIDIA Isaac ROS. The reference workflows showcase how to use these platforms, pretrained models and accelerated libraries together in robotics solutions.

A key new addition to GR00T-Perception is ReMEmbR, an applied research reference workflow that enhances human-robot interaction by enabling robots to “remember” long histories of events, significantly improving personalized and context-aware responses. This workflow integrates vision language models, LLMs, and retrieval-augmented memory to boost perception, cognition, and adaptability in humanoid robots.

ReMEmbR enables robots to retain contextual information over time, improving spatial awareness, navigation, and interaction efficiency by integrating sensory data like images and sounds. It follows a structured memory-building and querying process and can be deployed on NVIDIA Jetson AGX Orin on real robots.
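The memory-building and querying process can be pictured as two phases: the robot stores timestamped, located descriptions of what it observes, then retrieves the most relevant entries when asked a question. The sketch below is a toy illustration of that pattern, not the ReMEmbR implementation. The class and function names are hypothetical, and the bag-of-words similarity stands in for the learned embeddings and vector database that a real system would more plausibly use.

```python
import math
from collections import Counter
from dataclasses import dataclass


@dataclass
class MemoryEntry:
    timestamp: float
    position: tuple
    caption: str  # e.g. text produced by a vision language model


def embed(text):
    """Toy bag-of-words embedding; a stand-in for a learned text encoder."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)  # Counter returns 0 for missing words
    norm = math.sqrt(sum(v * v for v in a.values()))
    norm *= math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class RobotMemory:
    def __init__(self):
        self.entries = []

    def add(self, entry):
        """Memory-building phase: store the entry with its embedding."""
        self.entries.append((embed(entry.caption), entry))

    def query(self, question, k=1):
        """Querying phase: return the k entries most similar to the question."""
        q = embed(question)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[0]), reverse=True)
        return [entry for _, entry in ranked[:k]]


memory = RobotMemory()
memory.add(MemoryEntry(10.0, (1.0, 2.0), "a red mug on the kitchen counter"))
memory.add(MemoryEntry(25.0, (5.0, 0.5), "an elevator at the end of the hallway"))
memory.add(MemoryEntry(40.0, (3.0, 4.0), "a person sitting on the sofa"))

best = memory.query("where is the elevator")[0]
```

The retrieved entry carries a position and timestamp, which is what would let a downstream LLM turn a question like this into a navigation goal.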

To learn more about ReMEmbR, see Using Generative AI to Enable Robots to Reason and Act with ReMEmbR.

Conclusion

With NVIDIA Project GR00T, we are building advanced technology, tools, and GR00T workflows that can be used standalone or together, depending on the needs of humanoid robot developers. These enhancements contribute to the development of more intelligent, adaptive, and capable humanoid robots, pushing the boundaries of what these robots can achieve in real-world applications.

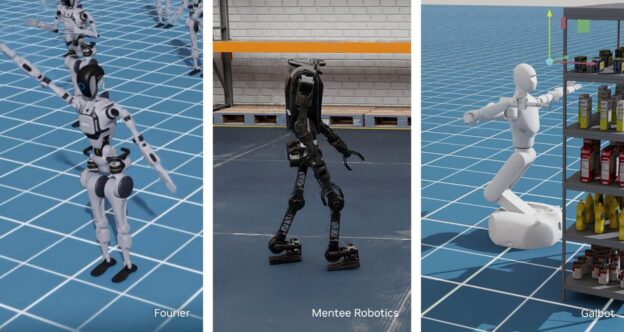

Leading robotics companies 1X, Agility Robotics, The AI Institute, Berkeley Humanoid, Boston Dynamics, Field AI, Fourier, Galbot, Mentee Robotics, Skild AI, Swiss-Mile, Unitree Robotics, and XPENG Robotics.

Learn more about NVIDIA at CoRL 2024, including 21 papers and nine workshops related to robot learning, as well as newly released training and workflow guides for developers.

Get started now

Get started with Isaac Lab, or migrate from Isaac Gym to Isaac Lab with new developer onboarding guides and tutorials. Check out the Isaac Lab Reference Architecture to understand the end-to-end robot learning process with Isaac Lab and Isaac Sim.

Discover the latest in robot learning and simulation in the livestream on November 13, and don’t miss the NVIDIA Isaac Lab Office Hours for hands-on support and insights.

If you’re a humanoid robot company building software or hardware for the humanoid ecosystem, apply to join the NVIDIA Humanoid Robot Developer Program.

Acknowledgments

This work would not have been possible without the contributions of Chenran Li, Huihua Zhao, Jim Fan, Karl Van Wyk, Oyindamola Omotuyi, Shri Sundaram, Soha Pouya, Spencer Huang, Wei Liu, Yuke Zhu, and many others.

Advancing Humanoid Robot Sight and Skill Development with NVIDIA Project GR00T