NVIDIA’s GPU business is booming thanks to AI, but it looks like the company may not be able to meet demand even as it ramps up production at TSMC.

NVIDIA A100 & H100 GPUs Are So Hot Right Now Due To The AI Boom That The Company May Not Be Able To Keep Up With The Demand

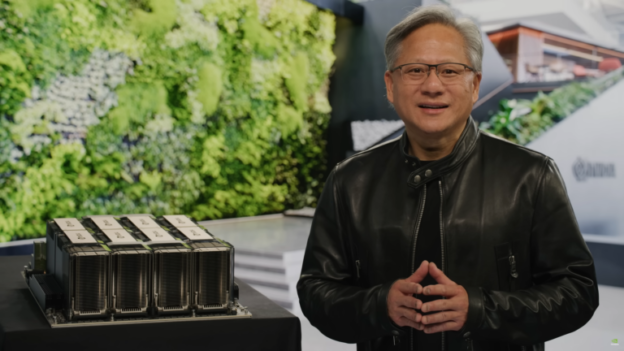

The boom in NVIDIA’s GPU business is the result of years of betting on AI as the next big thing. With ChatGPT and other generative AI models, those bets appear to have paid off, and the company is receiving orders from all corners of the tech industry for its latest GPUs, including the A100 and the H100.

Multiple reports now suggest that NVIDIA may not be able to keep up with the massive demand for AI chips despite its best efforts. A recent DigiTimes report says that as the green team sees a ramp-up in orders for its latest GPUs, the company has started increasing production of its chips at TSMC.

> Nvidia has seen a ramp-up in orders for its A100 and H100 AI GPUs, leading to an increase in wafer starts at TSMC, according to market sources.
>
> The AI boom brought on by ChatGPT has caused demand for high-computing GPUs to soar. Coupled with the US ban on AI chip sales to China, major Chinese companies like Baidu are buying up Nvidia’s AI GPUs.

The NVIDIA A100 uses TSMC’s 7nm process node, while the H100, which began shipping last May, is built on TSMC’s 4N process node (an optimized version of the 5nm node tailored for NVIDIA). Since the H100’s launch, the company has seen huge demand for the GPU and for its H100-based DGX H100 servers. NVIDIA has been trying to keep pace, but the recent AI boom has made that increasingly difficult.

In China, NVIDIA offers modified versions of the A100 and H100, known as the A800 and H800, to get around US export restrictions, and these chips are being sold at roughly 40% above their original MSRP. Demand for AI GPUs in China is so high right now that even the V100, a GPU launched in 2017 and the first with Tensor Cores, is priced at around $10,000 US (69,000 RMB). The NVIDIA A800 is priced at around 252,000 RMB ($36,500 US).

Delivery times have also reportedly been affected, as NVIDIA wants to serve its non-Chinese tech partners first. As a result, the delivery time for NVIDIA’s GPUs in China has stretched to around three months, and even longer in some cases. Some new orders reportedly may not be fulfilled until December, a wait of more than six months.

In a previous report, we noted that demand for NVIDIA’s HPC and AI GPUs could disrupt the supply of gaming chips, as the company shifts more resources toward AI, which it calls a revolution for the PC and tech industry. Demand was expected to outstrip supply sooner or later, but NVIDIA is working around the clock to keep a steady supply of chips flowing to its biggest partners, who are willing to pay a premium to secure the top AI chips in the world. NVIDIA’s hardware has also been a major driving force behind ChatGPT, with thousands of its GPUs powering the current and latest models.