This blog was written in collaboration with Chief Investment Strategist Dr. Charles Roberts.

The conversation around AI has evolved since the release of ChatGPT two years ago. Focus on chatbots like ChatGPT and Character.ai shifted first to those that retrieve data and analyze documents and then, after the rollouts of GPT-4 and multimodal AI, to those producing and analyzing media. While previous generations of AI could synthesize data to generate code or written language, they struggled to take actions that assist users with basic tasks. Now, new AI capabilities not only generate content but also act on behalf of users.

Meaningful progress in building automated systems that perform complicated tasks at higher levels of abstraction should free up human time and effort for opportunities that add even higher value. As a result, human action and decision making should become the ever-decreasing tip of an ever-increasing and more valuable iceberg.

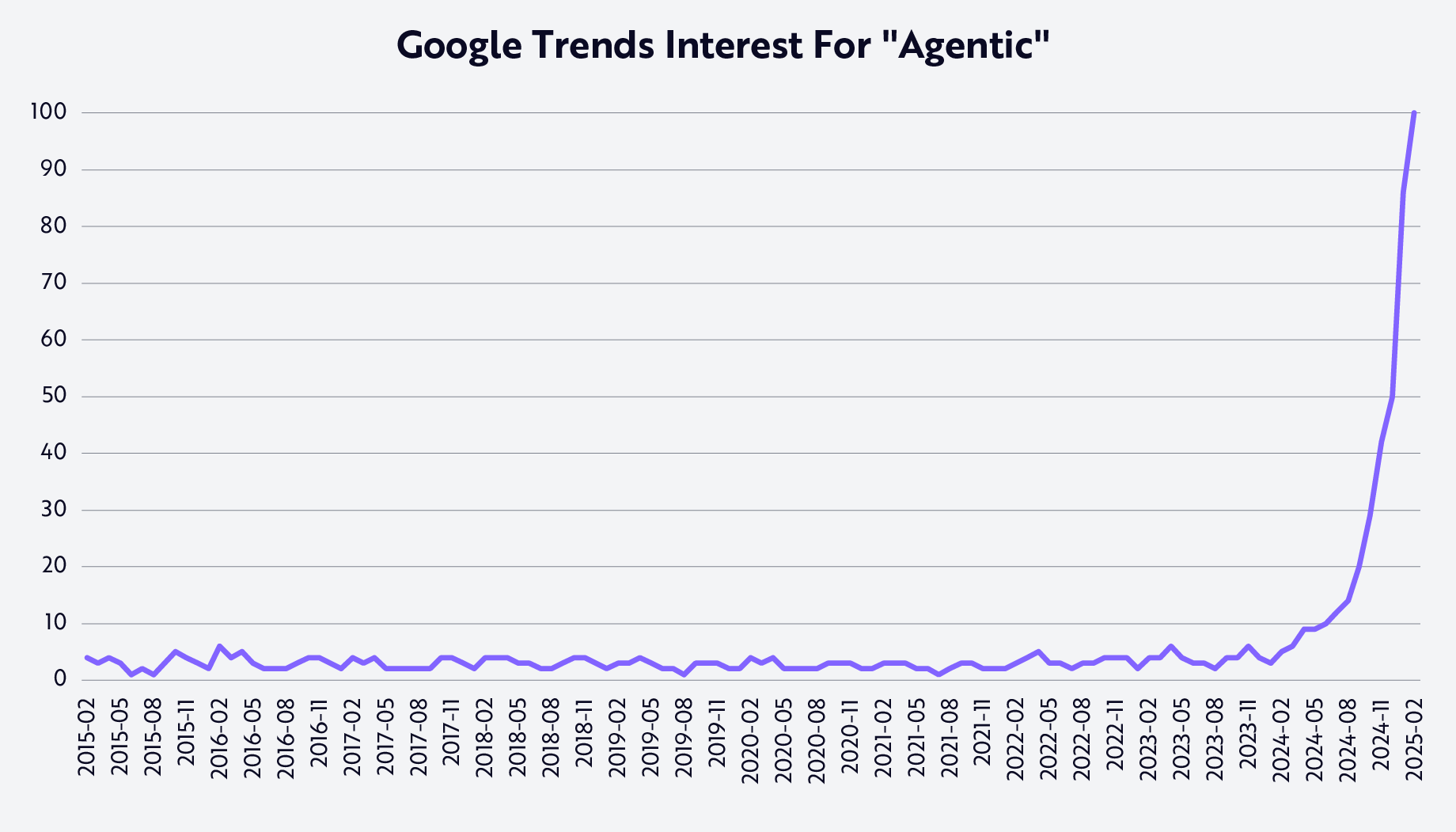

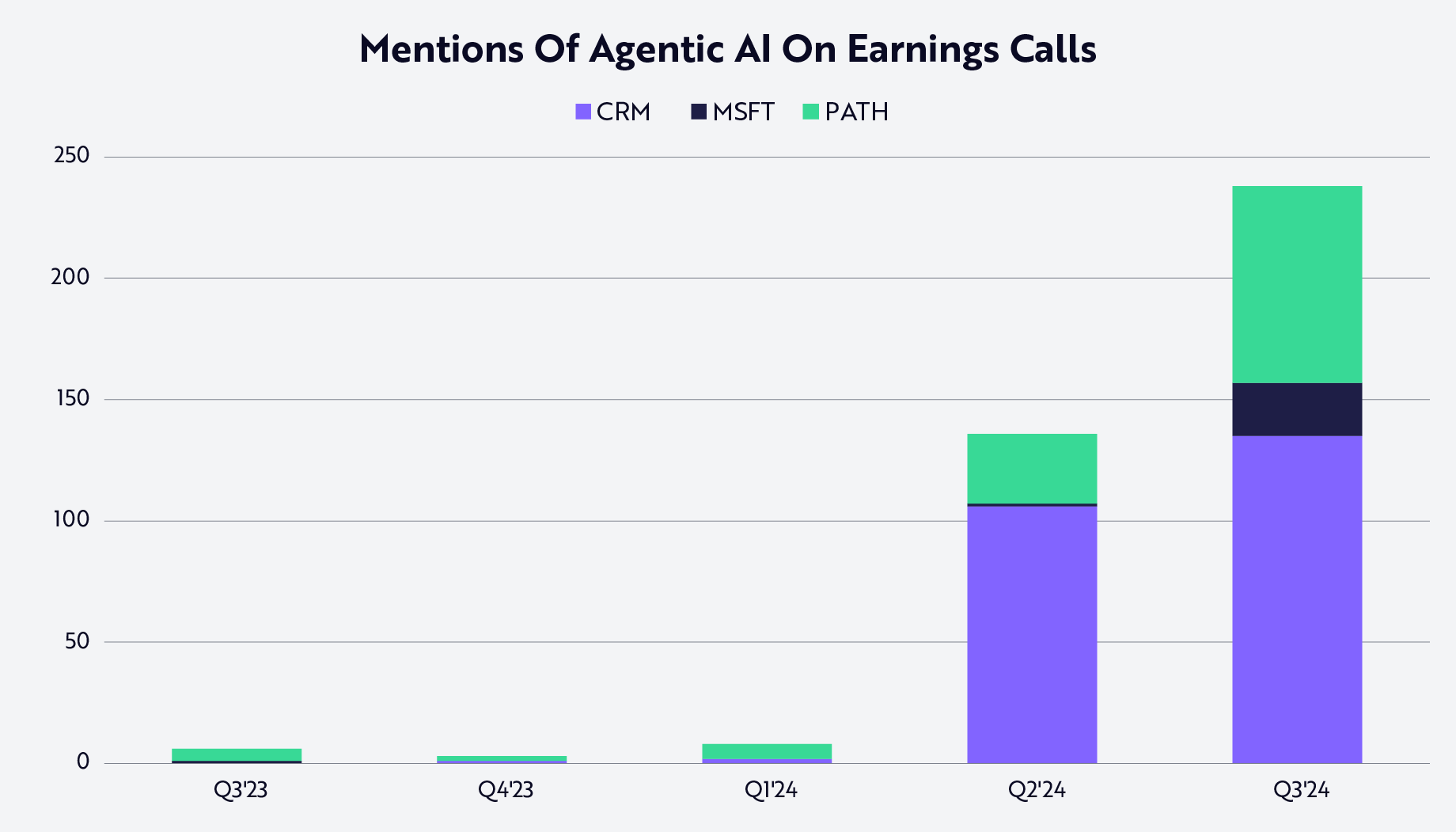

The productivity that many expect from autonomous AI agents has generated significant interest, as companies like Microsoft, Salesforce, and UiPath rebrand themselves as experts in building and orchestrating AI agents. Both search interest for agentic AI and mentions of “agents” or “agentic AI” on earnings calls have increased exponentially over the past four quarters, as shown in the two charts below. Moreover, the demand for AI agents suited for research, coding, and other business applications has created new companies like Sierra and Sakana and has launched new products from companies like Replit.

Google Trends data measure relative search interest over time, not absolute search volume, with 100 representing the maximum search interest for the given period. Source: ARK Investment Management LLC, 2025, based on data from Google Trends as of February 10, 2025. For informational purposes only and should not be considered investment advice or a recommendation to buy, sell, or hold any particular security.

Source: ARK Investment Management LLC, 2025, based on data from Aiera as of December 5, 2024. For informational purposes only and should not be considered investment advice or a recommendation to buy, sell, or hold any particular security.

Early examples of the benefits have bolstered interest in agentic AI. Klarna, currently a leader in buy-now-pay-later functionality, has deployed AI agents[1] that resolve issues five times faster than its former human customer service agents, with a 25% drop in repeat inquiries.[2] In 2024, its AI agents handled roughly two-thirds of Klarna’s customer service inquiries, performing the work equivalent to that of 700 full-time human agents while bolstering company profits by an estimated $40 million. In 2024, those improvements helped Klarna to reduce sales and marketing expenses and customer service and operations expenses by 16% and 14%, respectively, while achieving 23% year-on-year revenue growth.[3]

Among other compelling examples, Amazon is using AI coding agents to accelerate routine[4] software application upgrades and has saved 4,500 developer-years of work and ~$260 million at an annual rate,[5] while Palantir has partnered with Anthropic to create a suite of 78 AI agents[6] for a leading American insurer, reducing time spent on an underwriting process from two weeks to three hours.[7]

What is Agentic AI?

An AI agent is an application that not only reasons through a complex problem but also can create a structured problem-solving plan before using tools to execute its solution. While AI assistants have demonstrated impressive productivity gains in tasks like coding, added layers of organization and tool use can broaden an agent’s impact significantly. Andrew Ng, a leading AI researcher at Stanford, illustrated this idea during a talk hosted by the VC firm Sequoia Capital.[8] Through a literature review comparing the performance of GPT-3.5 and GPT-4, Ng and his team found that when equipped with agentic frameworks or tools, the older and less performant GPT-3.5 model outperformed the newer and more powerful GPT-4 that did not benefit from agentic tools. Equipping GPT-4 with the same agentic tools boosted its performance considerably.
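The plan-then-use-tools structure in that definition can be illustrated with a toy sketch. Everything here is hypothetical: the tool functions, their names, and the hard-coded plan are stand-ins invented for illustration, and a real agent would ask a language model to produce the plan rather than return a fixed list.

```python
from typing import Callable, Dict, List


# Hypothetical tools: plain functions standing in for real integrations.
def look_up_order(order_id: str) -> str:
    return f"order {order_id}: shipped"


def draft_reply(status: str) -> str:
    return f"Your {status}."


TOOLS: Dict[str, Callable[[str], str]] = {
    "look_up_order": look_up_order,
    "draft_reply": draft_reply,
}


def make_plan(goal: str) -> List[str]:
    """Return an ordered list of tool names for the goal.

    Assumption: the plan is hard-coded here; a real agent would
    generate it with a language model based on the goal.
    """
    return ["look_up_order", "draft_reply"]


def run_agent(goal: str, initial_input: str) -> str:
    """Execute each planned step, feeding every result into the next tool."""
    result = initial_input
    for step in make_plan(goal):
        result = TOOLS[step](result)
    return result


if __name__ == "__main__":
    print(run_agent("answer a shipping question", "A123"))
```

The point of the sketch is the separation of concerns: planning decides which tools to call and in what order, while execution simply walks the plan.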

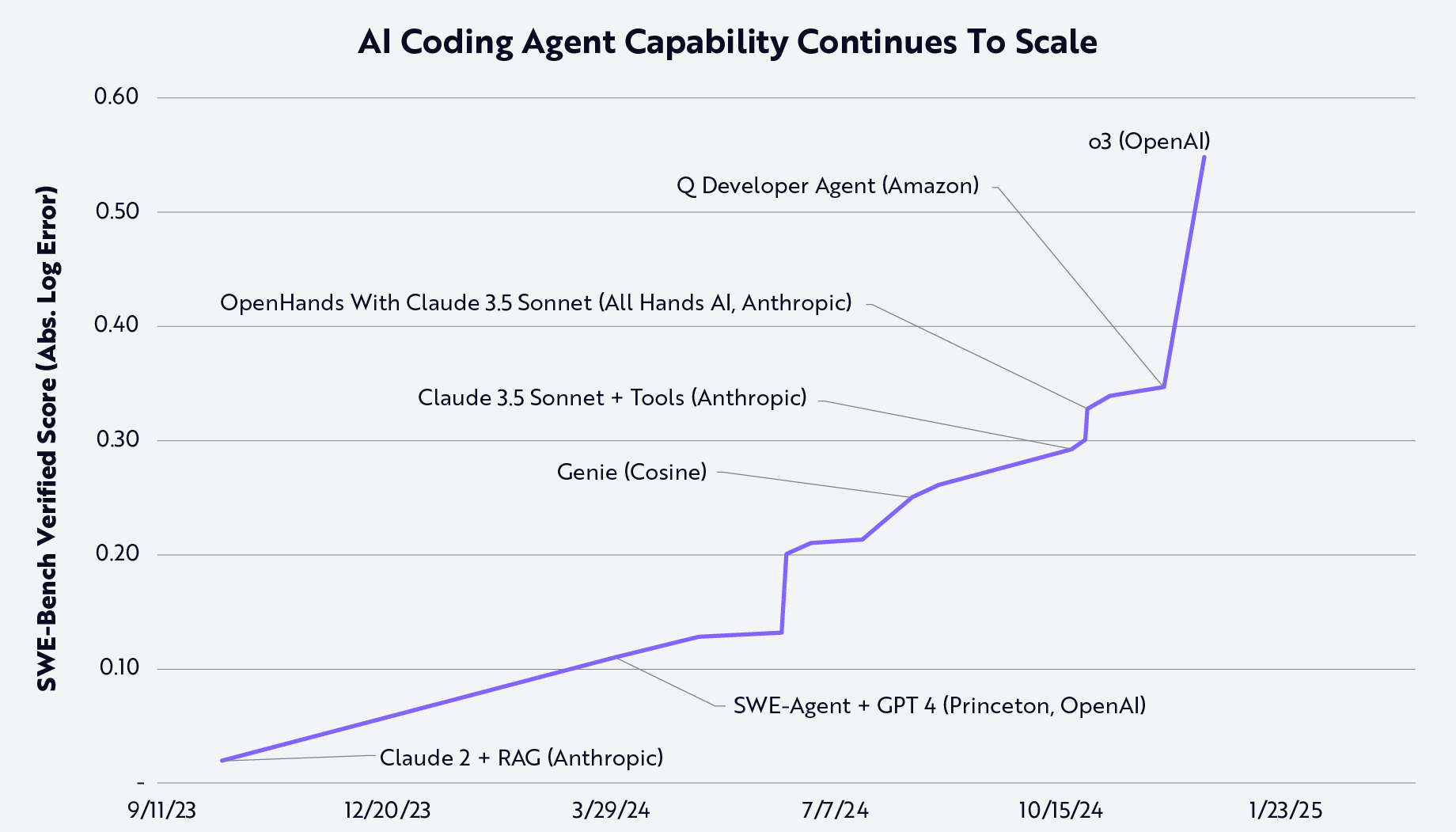

Among the new benchmarks assessing performance, SWE-bench[9] has become one of the most important for measuring an AI agent’s ability to write code autonomously. SWE-bench asks an AI agent to solve 2,294 coding issues autonomously in a collection of real-world GitHub code repositories, testing the model’s performance under conditions more realistic than academic tests like HumanEval. An update developed by the original research team in collaboration with OpenAI, SWE-bench Verified,[10] tracks a subset of 500 SWE-bench issues that human annotators have confirmed have clear, unambiguous criteria for ascertaining whether a problem has been solved.

Performance on SWE-bench Verified demonstrates both the progress of agentic performance over the past year and the room for improvement. In October 2023, the best-performing model on SWE-bench Verified was Anthropic’s Claude 2, yet it resolved only 4.4%[11] of the issues. As of December 2024, OpenAI’s o3 was the best-performing model with a score of 71.7%.[12] The SWE-bench Verified scores for Claude 2, o3, and other models of interest are shown in the chart below, with scores based on a log-error adjusted scale to account for the increased incremental effort necessary to achieve higher scores.

Source: ARK Investment Management LLC, 2025, based on data from SWE-bench and OpenAI as of December 31, 2024.[13] For informational purposes only and should not be considered investment advice or a recommendation to buy, sell, or hold any particular security. Past performance is not indicative of future results.

Salesforce released its own LLM Benchmark for CRM,[14] which evaluates the suitability of large language models for handling sales and customer service tasks. The benchmark measures model accuracy, speed, cost, and safety. Larger models like Claude 3 Opus and smaller models like Claude 3 Haiku notched high scores in accuracy and speed, respectively, while GPT-4o mini performed well in several categories, especially cost. Importantly, the tradeoffs among accuracy, speed, cost, and safety suggest that users are likely to leverage several models, with no model dominating all use cases.

While models have shown significant improvements on both SWE-bench Verified and Salesforce’s LLM Benchmark, more benchmarks are likely to be created as agentic capabilities evolve with new use cases.

Potential Impacts On Customer Service: A Case Study

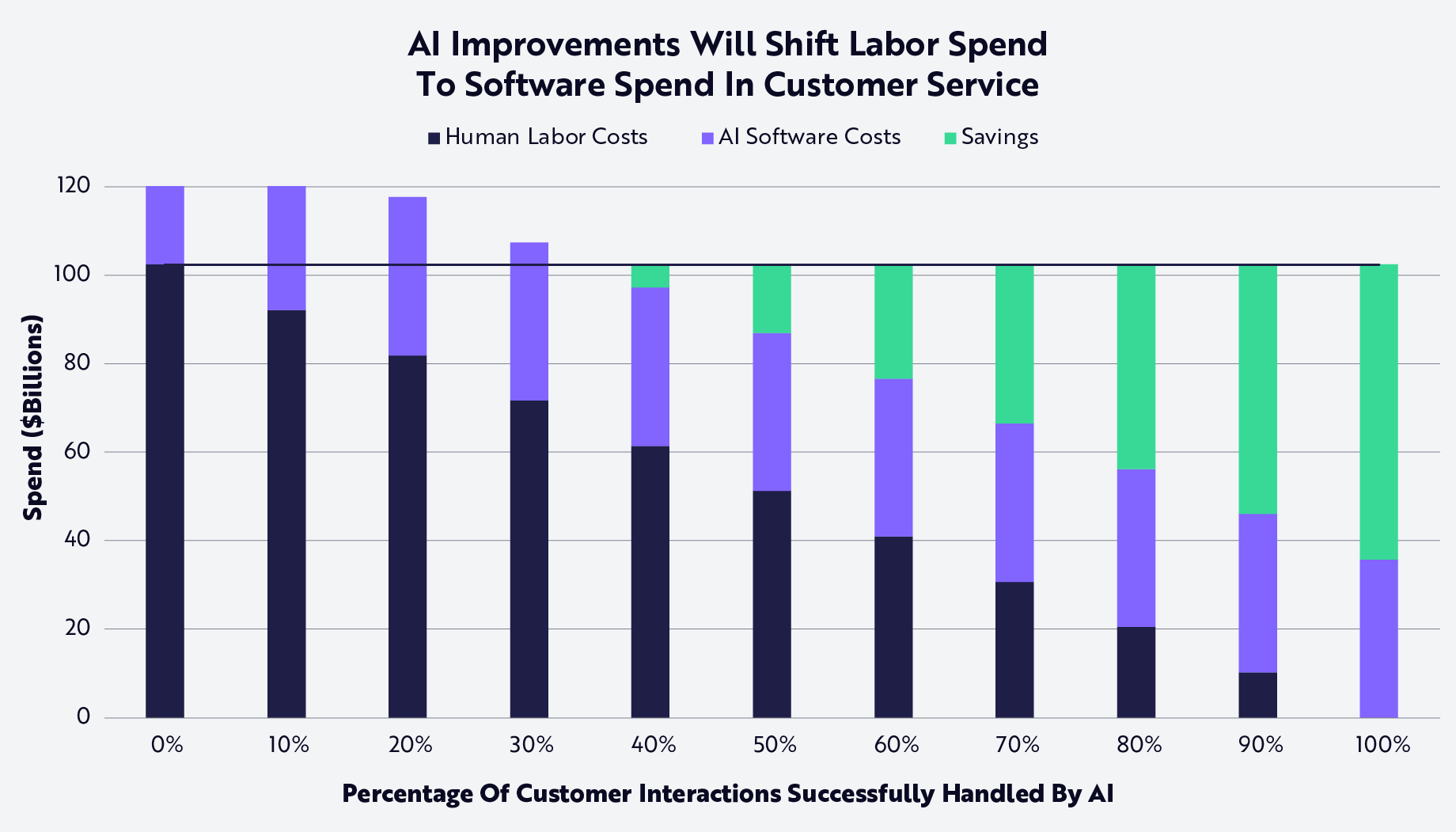

AI agents have found meaningful traction in customer service and support. Based on the number of human customer support agents in the United States (approximately 2.9 million,[15] according to the Bureau of Labor Statistics) and an average wage of $17.91 per hour,[16] the labor cost of customer support in the US is ~$102 billion each year. Assuming that each agent handles 50 calls or chats per day,[17] the average cost per human-supported conversation would be ~$2.87.
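The arithmetic behind those two figures can be reproduced in a few lines. The sketch below is a rough reconstruction, not ARK's actual model: the 8-hour day and the 245 working days per year are assumptions (neither is stated in the text), chosen because they land on the rounded totals quoted above.

```python
# Figures quoted in the text.
SUPPORT_AGENTS = 2_900_000     # US customer support agents
HOURLY_WAGE_USD = 17.91        # average hourly wage
CONVERSATIONS_PER_DAY = 50     # calls or chats per agent per day

# Assumptions (not stated in the text).
HOURS_PER_DAY = 8
WORKDAYS_PER_YEAR = 245


def annual_labor_cost() -> float:
    """Total yearly wage bill for all support agents, in USD."""
    paid_hours = HOURS_PER_DAY * WORKDAYS_PER_YEAR
    return SUPPORT_AGENTS * HOURLY_WAGE_USD * paid_hours


def cost_per_conversation() -> float:
    """Wage cost of one human-handled conversation, in USD."""
    return HOURLY_WAGE_USD * HOURS_PER_DAY / CONVERSATIONS_PER_DAY


if __name__ == "__main__":
    print(f"Annual labor cost: ${annual_labor_cost() / 1e9:.1f} billion")
    print(f"Cost per conversation: ${cost_per_conversation():.2f}")
```

Under these assumptions the script reports roughly $101.8 billion per year and $2.87 per conversation, in line with the rounded figures in the text.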

In September 2024, however, Salesforce announced a customer service AI agent that handles incoming customer conversations at a starting cost of $2 per conversation, with discounts for enterprise adopters. As the cost of AI models continues to fall, that price should decline significantly. Even at a fixed cost of $1 per conversation, AI agents could offer enterprises material savings once they handle 35% of incoming customer requests, as illustrated below. At that point, the reduction in labor costs begins to offset the cost of AI software that screens incoming calls. Moreover, as the proficiency of AI agents increases, companies can reallocate more capital from labor to software, adding corporate savings to the enterprise over time, as shown in green below.
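One way to see where a break-even near 35% comes from is to compare the AI price per conversation with the human wage cost per conversation. This is a simplified sketch under stated assumptions, not ARK's model: it assumes an 8-hour workday, that the AI is billed on every incoming conversation, and that each conversation it resolves avoids exactly one conversation's worth of human wages.

```python
HOURLY_WAGE_USD = 17.91      # from the text
CONVERSATIONS_PER_DAY = 50   # from the text
HOURS_PER_DAY = 8            # assumption, not stated in the text


def human_cost_per_conversation() -> float:
    """Wage cost of one human-handled conversation, in USD."""
    return HOURLY_WAGE_USD * HOURS_PER_DAY / CONVERSATIONS_PER_DAY


def break_even_share(ai_price_usd: float) -> float:
    """Share of conversations the AI must resolve to cover its own cost.

    A result above 1.0 means the AI cannot break even at that price
    under the assumptions described above.
    """
    if ai_price_usd < 0:
        raise ValueError("price must be non-negative")
    return ai_price_usd / human_cost_per_conversation()


if __name__ == "__main__":
    for price in (2.00, 1.00):
        print(f"${price:.2f} per conversation: break-even at "
              f"{break_even_share(price):.1%} of requests resolved")
```

At $1 per conversation the ratio is about 35%, matching the text. At the $2 starting price it is about 70%, which suggests how sensitive the economics are to the per-conversation price.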

Source: ARK Investment Management LLC, 2025. This ARK analysis draws on a range of external data sources, including The U.S. Bureau of Labor Statistics and Salesforce, as of December 31, 2024, which may be provided upon request. For informational purposes only and should not be considered investment advice or a recommendation to buy, sell, or hold any particular security. Forecasts are inherently limited and cannot be relied upon.

As the data above suggest, if an AI agent were to screen 50 incoming interactions per day per customer service worker in the US at a fixed price of $1 per conversation, software spend on AI customer support agents would rise to more than $35 billion. If the AI tool could handle 40% of incoming customer requests successfully, without escalating to a human agent, $35 billion in software spend would displace ~$40 billion of labor costs, saving the industry ~$5 billion per year. Those savings would increase more than seven-fold to ~$36 billion if the AI agent could handle 70% of requests, and to more than $56 billion at 90% of incoming requests.
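The figures in this paragraph follow from a simple spend-versus-displacement calculation, sketched below. The 8-hour day and 245 working days per year are assumptions not stated in the text; they are used here because they reproduce the rounded totals. The sketch also assumes that labor cost falls in direct proportion to the share of requests the AI resolves.

```python
# Figures quoted in the text.
SUPPORT_AGENTS = 2_900_000
HOURLY_WAGE_USD = 17.91
CONVERSATIONS_PER_DAY = 50
AI_PRICE_USD = 1.00

# Assumptions (not stated in the text).
HOURS_PER_DAY = 8
WORKDAYS_PER_YEAR = 245


def software_spend() -> float:
    """Yearly AI spend if every incoming conversation is screened."""
    conversations = SUPPORT_AGENTS * CONVERSATIONS_PER_DAY * WORKDAYS_PER_YEAR
    return conversations * AI_PRICE_USD


def labor_displaced(share_resolved: float) -> float:
    """Yearly wages avoided when the AI resolves the given share."""
    total_wages = (SUPPORT_AGENTS * HOURLY_WAGE_USD
                   * HOURS_PER_DAY * WORKDAYS_PER_YEAR)
    return share_resolved * total_wages


def net_savings(share_resolved: float) -> float:
    """Labor displaced minus software spend, in USD per year."""
    return labor_displaced(share_resolved) - software_spend()


if __name__ == "__main__":
    print(f"Software spend: ${software_spend() / 1e9:.1f}B")
    for share in (0.40, 0.70, 0.90):
        print(f"{share:.0%} resolved: displaced "
              f"${labor_displaced(share) / 1e9:.1f}B, "
              f"net ${net_savings(share) / 1e9:.1f}B")
```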

While AI agents may not yet be capable of handling a high enough percentage of customer interactions to pay for themselves, their potential to lower not only “onboarding” and hiring costs but also seat-based software costs, combined with their ability to scale during peak volumes, could create meaningful enterprise value.

While those estimates assume a fixed price per conversation, our research also suggests that the cost per conversation will fall significantly over the coming months and years, as AI inference costs continue to fall more than 90% per year.[18]

If consumers were to gravitate toward AI customer support services that lower both wait times and error rates, demand could increase significantly, which, along with lower AI inference costs, could be a win-win for enterprises. If the cost per conversation were to fall to ~$0.125 and the number of conversations were to increase by 8x, AI could save enterprises more than $500 billion on human labor costs, as illustrated below.
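To get a feel for what a 90% annual decline implies, the compounding can be sketched as below. This carries a strong assumption that is not in the text: that the price per conversation tracks inference cost one-to-one. In practice, vendor pricing may fall more slowly than the underlying compute cost.

```python
import math


def projected_cost(start_cost: float, annual_decline: float, years: float) -> float:
    """Cost after `years` if it falls by `annual_decline` each year."""
    if not 0 < annual_decline < 1:
        raise ValueError("annual_decline must be between 0 and 1")
    return start_cost * (1 - annual_decline) ** years


def years_to_reach(start_cost: float, target_cost: float,
                   annual_decline: float) -> float:
    """Years until the cost falls from start_cost to target_cost."""
    if not 0 < annual_decline < 1:
        raise ValueError("annual_decline must be between 0 and 1")
    return math.log(target_cost / start_cost) / math.log(1 - annual_decline)


if __name__ == "__main__":
    for year in (1, 2, 3):
        print(f"Year {year}: ${projected_cost(1.00, 0.90, year):.3f} per conversation")
    eighth = years_to_reach(1.00, 0.125, 0.90)
    print(f"Years to fall to one-eighth of the starting cost: {eighth:.2f}")
```

At a 90% annual decline, a cost falls to one-eighth of its starting level in a little under a year.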


Source: ARK Investment Management LLC, 2025. This ARK analysis draws on a range of external data sources, including The U.S. Bureau of Labor Statistics and Salesforce, as of December 31, 2024, which may be provided upon request. For informational purposes only and should not be considered investment advice or a recommendation to buy, sell, or hold any particular security. Forecasts are inherently limited and cannot be relied upon.
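The lower-price, higher-volume scenario can be explored with a similar back-of-the-envelope calculation. The text does not state what share of requests the AI resolves in this scenario, so `share_resolved` is an explicit assumption here, as are the 8-hour day and 245 working days per year. The sketch also assumes that "savings" means human wages avoided at the higher volume, net of AI software spend.

```python
# Baseline yearly conversations: agents * conversations/day * workdays.
# The 245 workdays figure is an assumption, not stated in the text.
BASE_CONVERSATIONS = 2_900_000 * 50 * 245

# Human wage cost per conversation; the 8-hour day is an assumption.
HUMAN_COST_USD = 17.91 * 8 / 50


def scenario_savings(share_resolved: float, price_usd: float = 0.125,
                     volume_multiple: float = 8) -> float:
    """Wages avoided minus AI spend at the scaled-up volume, in USD."""
    conversations = BASE_CONVERSATIONS * volume_multiple
    software = conversations * price_usd
    labor_avoided = conversations * share_resolved * HUMAN_COST_USD
    return labor_avoided - software


def min_share_for(target_usd: float, price_usd: float = 0.125,
                  volume_multiple: float = 8) -> float:
    """Smallest resolved share that reaches the target net savings."""
    conversations = BASE_CONVERSATIONS * volume_multiple
    software = conversations * price_usd
    return (target_usd + software) / (conversations * HUMAN_COST_USD)


if __name__ == "__main__":
    for share in (0.70, 0.90):
        print(f"{share:.0%} resolved: ${scenario_savings(share) / 1e9:.0f}B saved")
    print(f"Share needed for $500B: {min_share_for(500e9):.1%}")
```

Under these assumptions, total software spend stays the same as in the $1 case because the 8x volume offsets the 8x price cut, and net savings exceed $500 billion once the AI resolves roughly two-thirds of requests.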

What Does the Future Hold for Agentic AI?

As the quote often attributed to Niels Bohr goes, “It’s difficult to make predictions, especially about the future.” So it goes with agentic AI. That said, several variables will matter.

The first consideration is that agentic progress is likely to proceed at different rates depending on the problems being solved. As suggested in our “Starfish” model below, for example, the ability to confirm when an agent has been successful is critical to closing the loop of success, which helps explain why GenAI code generation has been a clear winner: the endpoints are clear-cut and can be ascertained rapidly and automatically. Does a programming function, for example, work or not?

Note: Progress in agentic AI will likely be most rapid in settings where successful outcomes can be verified easily. Regarding the chart on the right, commercial and research examples of each category include coding agents like Replit Agent and GitHub Agent Mode, customer service agents from Klarna/OpenAI and Salesforce, mathematics agents including Google DeepMind’s AlphaProof, molecular/therapeutic design from Recursion Pharma, and automated form-filling agents from UiPath. Source: ARK Investment Management LLC, 2025. For informational purposes only and should not be considered investment advice or a recommendation to buy, sell, or hold any particular security.

Another important variable in agentic AI’s future is what will happen as the agentic “iceberg” becomes a larger part of the economy. What happens if one agent or a small handful of agents learns what is most successful in attracting other agents to transact with them, potentially spiraling into a feedback loop? In the extreme case, one imagines an exponential “agentic breakout” in which a large part of the economy concentrates rapidly into a small number of agents, perhaps even only one agent. The emergence of agent-dedicated languages[19] seems to amplify that possibility.

AI agent permutations seem open-ended and should have a significant economic impact. The customer service case study above highlights the potential software spend and savings generated by AI agents in a US industry that spends ~$100 billion per year on wages today. If the capabilities of AI agents continue to scale, much of the estimated $20 trillion[20] spent globally per year on knowledge work could be automated or augmented by agents, shifting traditional labor costs to AI software and the supporting platforms and infrastructure.