Jensen Huang claims Nvidia’s new Grace Hopper Superchip, which combines a CPU and GPU on an integrated module, can cut energy costs while delivering much faster performance for generative AI workloads than traditional CPUs. He says the chip can also help firms save significant money on capital costs when matching the baseline performance of CPUs.

Nvidia CEO Jensen Huang has a mantra that he repeated so often during his SIGGRAPH 2023 keynote last week that it almost became a running joke: “the more you buy, the more you save.”

Huang was referring to the GPU giant’s assertion that its AI chips can save companies significant money compared with traditional CPUs in what he views as the future of computing: data centers that, fueled by demand for generative AI capabilities, rely on large language models (LLMs) to answer user queries and generate content for a wide range of applications.


“The canonical use case of the future is a large language model on the front end of just about everything: every single application, every single database, whenever you interact with a computer, you’ll likely be first engaged in a large language model,” he said.

According to Huang, Nvidia’s AI chips can save money for operators of data centers focused on LLMs and other compute-intensive workloads because the chips run much faster and more efficiently than CPUs, and the more chips operators buy, the more those savings grow.

“This is the reason why accelerated computing is going to be the path forward. And this is the reason why the world’s data centers are very quickly transitioning to accelerated computing,” Huang said.

“And some people say—and you guys might have heard, I don’t know who said it—but the more you buy, the more you save. And that’s wisdom,” he added, as industry enthusiasts chuckled along.

But despite the jokey atmosphere, Huang was serious about the underlying claims, stressing that these points should be the main takeaways from his keynote.

Saving On Energy And Capital Costs With AI Chips

To illustrate the benefits of Nvidia’s chips in data centers, Huang gave two examples comparing them with CPUs: one focused on how they can reduce a data center’s overall power budget while improving performance, and the other on how they can significantly reduce capital costs.

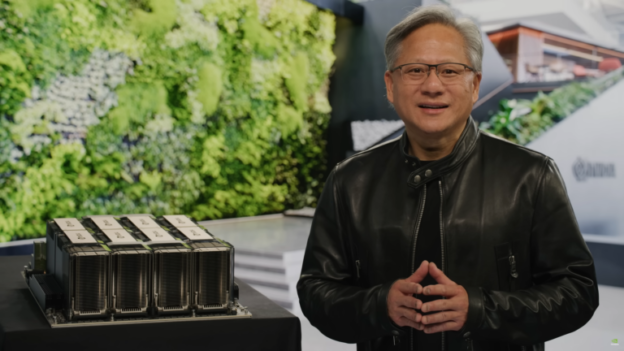

Huang’s examples were based on the company’s most advanced AI chip yet, the Grace Hopper Superchip, which combines a 72-core Grace CPU and a Hopper H100 GPU, connected by a high-bandwidth chip-to-chip interconnect, with 480GB of LPDDR5X memory. An updated version coming next year will feature 141GB of HBM3e high-bandwidth memory.

In the power budget example, Huang offered a hypothetical data center with a $100 million budget running what he viewed as a representative group of generative AI workloads: Meta’s Llama 2 LLM used in conjunction with a vector database, plus the Stable Diffusion XL image-generation model.

The data center operator could use $100 million to buy 8,800 Intel Xeon Platinum 8480+ CPUs, one of the fastest and highest-core-count processors in Intel’s latest server chip lineup, and those processors would require a power budget of 5 megawatts, Huang said.

By contrast, $100 million could also buy 2,500 Grace Hopper Superchips and reduce the data center’s power budget to 3 megawatts. Plus, the data center would be able to run inference on the LLM and database workloads 12 times faster than the CPU-only configuration, according to Huang.

Because the Grace Hopper configuration does 12 times the work on three-fifths of the power, that translates into a 20-fold improvement in energy efficiency, he added. It would take a “very long time” for a traditional chipmaker to achieve that level of improvement by following Moore’s law, the observation championed by Intel that the number of transistors on an integrated circuit doubles roughly every two years, bringing boosts in performance and efficiency.
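The 20-fold figure comes from dividing the throughput gain by the relative power draw. The short Python sketch below reproduces that arithmetic using only the numbers Huang quoted; the variable and function names are illustrative, and the calculation assumes, as the keynote presented it, that the 12x speedup and the two power budgets describe the same workload mix.

```python
# Iso-budget figures as quoted by Huang ($100 million either way).
cpu_power_mw = 5.0   # 8,800 Intel Xeon Platinum 8480+ CPUs
gh_power_mw = 3.0    # 2,500 Grace Hopper Superchips
speedup = 12.0       # relative inference throughput, GPU vs. CPU configuration


def perf_per_watt_gain(speedup: float, base_power: float, new_power: float) -> float:
    """Energy-efficiency gain: relative throughput times the power reduction factor."""
    return speedup * base_power / new_power


gain = perf_per_watt_gain(speedup, cpu_power_mw, gh_power_mw)
print(f"Energy-efficiency gain: {gain:.0f}x")  # prints "Energy-efficiency gain: 20x"
```

Put another way, 12 times the throughput at 60 percent of the power works out to 12 / 0.6 = 20 times the work per watt.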

“This is a giant step up in efficiency and throughput,” Huang said.

In the other example, Huang took the same $100 million data center with 8,800 Intel Xeon CPUs and said that if an operator wanted to achieve the same performance with Grace Hopper Superchips, it would cost only $8 million and require a power budget of 260 kilowatts.

“So 20 times less power and 12 times less cost,” he said.
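Huang’s “20 times” and “12 times” are rounded ratios of the figures he gave. As a sketch using only those quoted numbers (variable names are illustrative), the exact ratios can be checked like this:

```python
# Iso-performance comparison as quoted by Huang: the same throughput as 8,800 Xeon CPUs.
cpu_cost_usd = 100_000_000
cpu_power_kw = 5_000       # 5 megawatts
gh_cost_usd = 8_000_000
gh_power_kw = 260          # Grace Hopper configuration

cost_ratio = cpu_cost_usd / gh_cost_usd     # capital cost reduction factor
power_ratio = cpu_power_kw / gh_power_kw    # power reduction factor

print(f"{cost_ratio:.1f}x less cost, {power_ratio:.1f}x less power")
# prints "12.5x less cost, 19.2x less power"
```

The unrounded results, 12.5x on cost and about 19.2x on power, are what Huang rounded to 12 times and 20 times.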

Partners Say Nvidia’s Mantra Is Right On

Two executives at top Nvidia partners told CRN that their companies have seen customers improve performance and, in many instances, save money on compute-heavy applications when they switch from CPU-based data center infrastructure to GPU-driven infrastructure.

“We’ve spent the last five to 10 years getting folks to go from CPU to using the GPU, which has exponentially sped up these jobs and collapses costs,” said Andy Lin, CTO at Houston, Texas-based Mark III Systems, which was named Nvidia’s top North American health care partner this year.

Lin said he’s excited by how a chip like Grace Hopper could further improve performance and efficiency by making memory coherent between the CPU and GPU and by removing unnecessary features found in a general-purpose x86 CPU.

“When you have something purpose-built for these types of workloads, the economics tend to be better because they’re designed specifically for that versus having a lot of waste in general purpose x86 CPU where they’ve got stuff that has to account for all possibilities, not just your deep learning jobs, not just your machine learning or your accelerator-enabled software,” he said.

Brett Newman, vice president of marketing and customer engagement for high-performance computing and AI at Plymouth, Mass.-based Microway, said his customers often take advantage of savings in both capital costs and energy when moving workloads from CPUs to GPUs.

“The most frequent outcome is really a blend. They’ll say, ‘Great, I’m going to [use] some of the savings that I might have had through accelerated computing to [get] an overall improvement in performance. But I’m also going to take the power and energy efficiency savings as well,’” he said.

This is a consistent theme Newman has seen ever since Nvidia pioneered GPU computing more than a decade ago to accelerate scientific computing workloads.

Now with many organizations developing generative AI applications to meet high industry demand, there is a greater need to squeeze out as much performance as possible from GPUs, he added.

“They have this constant appetite for computing, and they’ll take any computational horsepower advances they can get. Sometimes saving on the power budget is lovely, but sometimes they’ll go, ‘Great, I then want to run the next application that is more computationally hungry too,’” Newman said.

Intel, AMD Ramp Up Competition With New AI Chips

What Huang didn’t mention during his SIGGRAPH keynote is that while Intel is trying to promote its CPUs for some AI workloads, the larger rival is making a major push to sell powerful AI chips that compete with Nvidia’s GPUs. This includes the Gaudi deep learning processors from its Habana business and data center GPUs, the most powerful of which launched earlier this year.

With Nvidia’s “incredible products and road maps” in mind, Newman said, he doesn’t think Intel will become more competitive until the semiconductor giant merges its AI chip road maps and incorporates its Gaudi chip technology into future GPUs, starting with Falcon Shores in 2025.

“It’s a question of when things will come to market and what are they like when they do,” he said.

When it comes to Nvidia’s other major rival, AMD, Newman believes the competing chip designer could “have a lot of the right things at the right time” when it launches its Instinct MI300 chips later this year.

“Right now, it feels like everyone wants a piece of the pie. And the pie is growing so much that there might be enough for everyone to have really healthy businesses out of it,” he said.

https://www.crn.com/news/components-peripherals/nvidia-ceo-explains-how-ai-chips-could-save-future-data-centers-lots-of-money