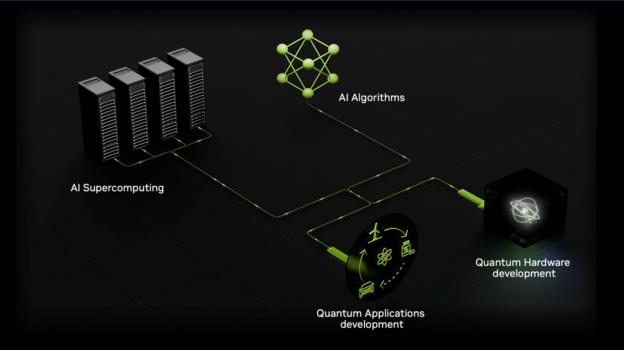

NVIDIA’s vision of accelerated quantum supercomputers integrates quantum hardware and AI supercomputing to turn today’s quantum processors into tomorrow’s useful quantum computing devices.

At Supercomputing 2024 (SC24), NVIDIA announced a wave of projects with partners that are helping the quantum ecosystem overcome the challenges standing between today’s technologies and accelerated quantum supercomputing. This post highlights these projects, which span quantum hardware, algorithms, and system integrations.

AI breakthroughs meet quantum computing

Generative AI is one of the most promising tools for addressing the challenges facing quantum computing. NVIDIA has partnered with scientists from industry and academia to release a new review paper, Artificial Intelligence for Quantum Computing, outlining how AI is set to change quantum computing (Figure 1).

Focusing on topics such as using GPT models to synthesize quantum circuits and transformers to decode quantum error correction (QEC) codes, the paper surveys critical work occurring at the intersection of two of computing’s most transformational disciplines.

Multi-QPU, multi-GPU, multi-user

Poznan Supercomputing and Networking Center (PSNC) and ORCA Computing announced results from an AI for quantum collaboration with NVIDIA. The team demonstrated the first fully functional multi-QPU, multi-GPU, multi-user infrastructure by leveraging NVIDIA H100 Tensor Core GPUs, the NVIDIA CUDA-Q platform, and two ORCA PT-1 photonic quantum computers.

The CUDA-Q platform was used to develop and run a novel Resource State Generator with Pretrained Transformers (RS-GPT) algorithm that uses AI to improve the design of photonic quantum processors. The collaboration also produced a hybrid quantum-classical generative adversarial network (GAN) workflow for recognizing human facial images. Additionally, a hybrid quantum neural network for biological image classification was developed, targeting medical diagnostic applications.

Integrating quantum with classical

A number of announcements at SC24 demonstrate how CUDA-Q continues to integrate with quantum hardware providers of all qubit modalities and enable greater accessibility to accelerated quantum supercomputing.

QuEra, Anyon, and Fermioniq now available in CUDA-Q

The qubit-agnostic CUDA-Q development platform is now integrated with three new partners: Anyon (superconducting QPU), Fermioniq (quantum circuit emulator), and QuEra (neutral atom QPU). With the CUDA-Q quantum-classical workflow, quantum developers can now seamlessly weave these resources into hybrid algorithms.

Quantum Brilliance integrates with German supercomputing

Quantum Brilliance announced the upcoming integration of its diamond-based QPU into the existing supercomputing infrastructure of the Fraunhofer Institute for Applied Solid State Physics IAF. The system is compatible with CUDA-Q, which could be used to develop and test applications on this new hybrid quantum-classical supercomputing system.

Advancing quantum hardware design

The CUDA-Q platform now enables quantum hardware developers to leverage AI supercomputing for accelerating the design process.

Dynamics simulations in CUDA-Q 0.9

CUDA-Q 0.9 adds dynamical simulation capabilities for high-accuracy, scalable simulation of quantum systems. These simulations are a key tool for QPU vendors to understand the physics of their hardware and improve their qubit designs. Dynamics simulations in CUDA-Q can use built-in solvers, and they can now also run directly on QuEra’s analog quantum processor.
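To make "dynamical simulation" concrete, the following is a minimal sketch in plain NumPy, not the CUDA-Q dynamics API. It assumes a single resonantly driven qubit with Hamiltonian H = (Ω/2)X and ħ = 1, and evolves the state exactly by diagonalizing H. The function names and the drive strength are hypothetical choices made for illustration.

```python
import numpy as np

# Pauli-X operator and the computational basis state |0>
SIGMA_X = np.array([[0, 1], [1, 0]], dtype=complex)
KET_0 = np.array([1, 0], dtype=complex)


def evolve(hamiltonian, psi0, t):
    """Return exp(-i H t) |psi0> for a Hermitian H (units with hbar = 1)."""
    energies, vectors = np.linalg.eigh(hamiltonian)
    phases = np.exp(-1j * energies * t)
    # Rotate into the eigenbasis, apply the phases, rotate back.
    return vectors @ (phases * (vectors.conj().T @ psi0))


def excited_population(rabi_freq, t):
    """Population of |1> at time t for H = (rabi_freq / 2) * X, starting in |0>."""
    psi_t = evolve(0.5 * rabi_freq * SIGMA_X, KET_0, t)
    return float(np.abs(psi_t[1]) ** 2)


if __name__ == "__main__":
    omega = 2 * np.pi  # hypothetical drive: one full Rabi cycle per unit time
    for t in (0.0, 0.25, 0.5):
        print(t, round(excited_population(omega, t), 6))
```

The population follows the textbook Rabi formula sin²(Ωt/2). Real hardware models add many coupled levels, noise, and time-dependent controls, which is where GPU-accelerated solvers become necessary.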

Enabling Google to build better qubits

NVIDIA is also partnering with Google Quantum AI to perform large-scale, high-accuracy quantum dynamics simulations of their transmon qubits. This work is being performed using the new dynamics APIs now available in cuQuantum and CUDA-Q. To learn more about the breakthrough 40-qubit Google simulation data and other detailed benchmarks, see Accelerating Google’s QPU Development with New Dynamics Capabilities.

Broadening access to next-generation algorithm design

CUDA-QX is a collection of application-specific libraries optimized for GPU acceleration. By providing a higher-level interface to the power of CUDA-Q, these libraries make it easier for researchers to start exploring next-generation topics in quantum computing.

The CUDA-Q QEC (quantum error correction) library provides a number of built-in QEC codes, which can be used to build custom workflows and test the performance of fault tolerant algorithms.
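As an illustration of what testing the performance of a QEC code means, here is a small plain-Python sketch. It does not use the CUDA-Q QEC library. It assumes the simplest possible code, a repetition code with majority-vote decoding under independent bit flips, which protects against bit-flip errors only. The function names are hypothetical.

```python
from itertools import product


def majority_decode(bits):
    """Decode a repetition-code word by majority vote."""
    return int(sum(bits) > len(bits) / 2)


def logical_error_rate(p, distance=3):
    """Exact probability that majority vote fails under i.i.d. bit flips.

    Logical 0 is encoded as `distance` zeros, so each 1 in `flips`
    marks a physical qubit that was flipped with probability p.
    """
    total = 0.0
    for flips in product((0, 1), repeat=distance):
        weight = sum(flips)
        prob = p ** weight * (1 - p) ** (distance - weight)
        if majority_decode(flips) != 0:
            total += prob
    return total


if __name__ == "__main__":
    for d in (1, 3, 5):
        print(d, logical_error_rate(0.1, distance=d))
```

For a physical error rate of 0.1, the logical error rate drops as the distance grows from 1 to 3 to 5. Sweeps like this over codes, decoders, and noise models are the kind of workflow a QEC library is meant to support at much larger scale.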

The CUDA-Q Solvers library provides black-box quantum solvers and preprocessing capabilities. These make it much simpler to build simulations for applications such as chemistry, at scales that are only possible with GPU supercomputing.

To learn more about each library including example applications, see Introducing NVIDIA CUDA-QX Libraries for Accelerated Quantum Supercomputing.

Collaborating toward breakthrough applications

NVIDIA is partnering with expert developers and researchers across the quantum ecosystem. Highlights from a number of collaborations announced at SC24 include the following.

Quantum transformers with Yale University

Yale University and NVIDIA are collaborating to develop a novel quantum transformer model to generate new molecules with specific physicochemical properties. The team used CUDA-Q and its MQPU backend to develop a model that implements the transformer attention mechanism with quantum circuits, offering a new approach to capture complex molecular interactions directly within sequence modeling. CUDA-Q acceleration reduced the training time per epoch from over a week (on CPU) to just hours (on a four-GPU system) (Figure 2).

Using multiple QPUs and NVIDIA A100 GPUs on the Perlmutter supercomputer, the team produced the first example of a quantum model trained on the full QM9 small-molecule training set with a batch size of 256. This allows better comparison with classical machine learning (ML) models when assessing the viability of quantum transformer models.

Classifying binding affinity with Moderna

Classical ML models, including deep neural networks (DNNs), can effectively identify binding motifs from protein sequences that are essential for understanding health and disease. Yet, as these models scale, training becomes computationally challenging, constraining their capacity to address increasingly intricate biological data.

Quantum neural networks (QNNs) offer a compelling, accurate, and efficient alternative, seeking to use a QPU’s high-dimensional computational space to create more expressive ML models. But training such models is extremely difficult.

One approach to mitigate these difficulties is using quantum extreme learning machines (QELMs), which consist of an untrained QNN combined with a simple classical output layer. Figure 3 shows a schematic of a QELM model architecture adapted from Potential and Limitations of Quantum Extreme Learning Machines.
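The QELM structure described above (an untrained quantum circuit followed by a simple trained classical layer) can be sketched with a small NumPy statevector simulation. This is an illustrative toy under stated assumptions, not the NVIDIA and Moderna workflow: the RY angle encoding, the random unitary standing in for the untrained QNN, the synthetic target, and all function names are hypothetical.

```python
import numpy as np


def ry(theta):
    """Single-qubit rotation about the Y axis."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]], dtype=complex)


def random_unitary(dim, rng):
    """Fixed random unitary from the QR decomposition of a Gaussian matrix."""
    z = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    q, r = np.linalg.qr(z)
    return q * (np.diag(r) / np.abs(np.diag(r)))


def quantum_features(x, reservoir):
    """Encode x as RY angles, apply the untrained circuit, return outcome probabilities."""
    state = np.array([1.0 + 0j])
    for angle in x:
        state = np.kron(state, ry(angle) @ np.array([1, 0], dtype=complex))
    return np.abs(reservoir @ state) ** 2


def fit_readout(features, targets, ridge=1e-6):
    """Closed-form ridge regression: the only trained part of the model."""
    gram = features.T @ features + ridge * np.eye(features.shape[1])
    return np.linalg.solve(gram, features.T @ targets)


def demo(seed=0, n_qubits=3, n_samples=200):
    """Fit the readout on a synthetic regression task and return the training MSE."""
    rng = np.random.default_rng(seed)
    reservoir = random_unitary(2 ** n_qubits, rng)  # never updated
    inputs = rng.uniform(0, np.pi, size=(n_samples, n_qubits))
    targets = np.sin(inputs).prod(axis=1)  # synthetic target for illustration
    features = np.array([quantum_features(x, reservoir) for x in inputs])
    weights = fit_readout(features, targets)
    mse = float(np.mean((features @ weights - targets) ** 2))
    return features, targets, mse


if __name__ == "__main__":
    _, targets, mse = demo()
    print("training MSE:", mse, "target variance:", float(np.var(targets)))
```

Because only the linear readout is fitted, training reduces to a single linear solve and avoids gradient-based optimization of the circuit parameters.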

Using the CUDA-Q platform, NVIDIA and Moderna are developing a QELM workflow to predict biomolecule binding affinity. CUDA-Q accelerated simulations enable leveraging the large qubit numbers needed to see improvements in QELM test set accuracies. Future work will make use of new CUDA-Q dynamics capabilities to test the performance of QELM methods under realistic noise conditions.

Accelerating parallel circuit knitting with Hewlett Packard Enterprise

One approach to scaling quantum applications is to parallelize quantum circuits across multiple processors and then combine results through a procedure known as circuit knitting. NVIDIA is working with Hewlett Packard Enterprise (HPE) on a high-performance implementation of a tensor network-based technique for improving the accuracy and efficiency of adaptive circuit knitting (Figure 4). Early results from HPE suggest that it could outperform the sampling efficiency of existing circuit knitting approaches by one to two orders of magnitude.
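The basic idea of cutting a circuit and recombining the pieces can be shown on a single wire. The sketch below is not HPE's tensor network-based adaptive method. It is the generic textbook identity ρ = ½ Σ_P Tr(Pρ) P over the Pauli operators P ∈ {I, X, Y, Z}, which lets an upstream fragment be measured and a downstream fragment be run on Pauli eigenstates. All names are hypothetical, and the example uses NumPy only.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)
PAULIS = (I2, X, Y, Z)


def direct_expectation(rho, unitary, observable):
    """Tr[O U rho U^dagger] evaluated on the uncut circuit."""
    return float(np.trace(observable @ unitary @ rho @ unitary.conj().T).real)


def cut_expectation(rho, unitary, observable):
    """Reconstruct the same value from two separately evaluated fragments."""
    total = 0.0
    for pauli in PAULIS:
        # Upstream fragment: measure the Pauli expectation on the cut wire.
        upstream = np.trace(pauli @ rho).real
        # Downstream fragment: prepare each Pauli eigenstate and run the rest.
        eigvals, eigvecs = np.linalg.eigh(pauli)
        downstream = 0.0
        for val, vec in zip(eigvals, eigvecs.T):
            out = unitary @ vec
            downstream += val * np.vdot(out, observable @ out).real
        total += 0.5 * upstream * downstream
    return float(total)


if __name__ == "__main__":
    rho = np.array([[0.7, 0.2 - 0.1j], [0.2 + 0.1j, 0.3]], dtype=complex)
    hadamard = (X + Z) / np.sqrt(2)
    print(direct_expectation(rho, hadamard, Z), cut_expectation(rho, hadamard, Z))
```

In practice each term is estimated from a finite number of shots, so the number of terms and their weights drive the sampling cost. That overhead is what improved knitting techniques aim to reduce.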

Tensor networks for noise mitigation with Algorithmiq

Quantum error mitigation postprocesses the output of noisy quantum computers to improve results. NVIDIA is working with Algorithmiq to accelerate their tensor network error mitigation (TEM) techniques, with early tests demonstrating 300x speedups over previous CPU implementations. Algorithmiq plans to showcase accelerated TEM applications in the coming months, ranging from materials science to cybersecurity.
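To illustrate what postprocessing noisy output means, here is a deliberately tiny NumPy sketch. It is not Algorithmiq's TEM method and involves no tensor networks. It assumes a single qubit affected by a depolarizing channel with a known rate p, for which the expectation value of a traceless observable shrinks by a factor (1 - p) and can be rescaled afterward. All names and the noise rate are hypothetical.

```python
import numpy as np

Z = np.diag([1.0, -1.0]).astype(complex)


def pure_state(theta):
    """Density matrix of cos(theta/2)|0> + sin(theta/2)|1>."""
    psi = np.array([np.cos(theta / 2), np.sin(theta / 2)], dtype=complex)
    return np.outer(psi, psi.conj())


def depolarize(rho, p):
    """Single-qubit depolarizing channel: keep rho with probability 1 - p."""
    return (1 - p) * rho + p * np.eye(2) / 2


def expectation(rho, observable):
    """Expectation value Tr[O rho]."""
    return float(np.trace(observable @ rho).real)


def mitigated_expectation(noisy_value, p):
    """Undo the known depolarizing damping for a traceless observable."""
    return noisy_value / (1 - p)


if __name__ == "__main__":
    p = 0.2  # hypothetical, assumed-known noise rate
    ideal = expectation(pure_state(0.7), Z)
    noisy = expectation(depolarize(pure_state(0.7), p), Z)
    print(ideal, noisy, mitigated_expectation(noisy, p))
```

With finite shots, the rescaling also amplifies statistical error by 1/(1 - p), which is one reason efficient, accelerated mitigation methods matter for larger systems.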

Educating on quantum algorithms

NVIDIA is working with universities, including Arizona State and Carnegie Mellon, to develop CUDA-Q Academic course modules. These modules will build on the success of the previously released Divide and Conquer MaxCut QAOA and Quick Start to Quantum Computing modules, focusing on how to leverage GPUs for accelerating hybrid applications through CUDA-Q.

Get started

NVIDIA is working to drive the quantum ecosystem forward by partnering on projects spanning the application of AI to enable quantum computing, quantum hardware simulation, hybrid quantum-classical application development, and system integrations. Learn more about NVIDIA quantum computing and download CUDA-Q to start experimenting with accelerated quantum supercomputing for your own projects.


NVIDIA Partners Accelerate Quantum Breakthroughs with AI Supercomputing