“We’re going to see AI go into enterprise, which is on-prem, because so much of the data is still on-prem,” CEO Jensen Huang said during a Wednesday earnings call.

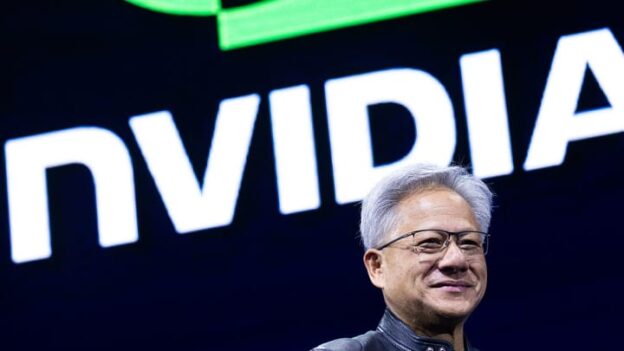

- Nvidia is gearing up to supply enterprise customers with AI processing power amid a rush to deploy agentic tools, CEO Jensen Huang said Wednesday during the company’s Q1 fiscal 2026 earnings call, which covered the three-month period ending April 27.

- “It’s really hard to move every company’s data into the cloud, so we’re going to move AI into the enterprise,” Huang said. “We’re going to see AI go into enterprise, which is on-prem, because so much of the data is still on-prem.”

- The GPU giant saw quarterly revenue increase 69% year over year to $44.1 billion as AI usage levels spiked. “AI workloads have transitioned strongly to inference, and AI factory buildouts are driving significant revenue,” Nvidia EVP and CFO Colette Kress said during the earnings call.

Dive Insight:

Nvidia’s fortunes have soared over the last two-and-a-half years, driven by massive tech sector investments in AI-optimized data center infrastructure to train and deploy large language models. While hyperscaler hunger for GPUs remains robust, the company is betting on the enterprise market to pick up momentum.

Large cloud providers installed an average of roughly 72,000 Nvidia Blackwell GPUs per week during the quarter and are on track to increase consumption, according to Kress. “Microsoft, for example, has already deployed tens of thousands of Blackwell GPUs and is expected to ramp up to hundreds of thousands of GB200s with OpenAI as one of its key customers,” Kress said.

[Chart: Nvidia revenues soared to $44.1B in two-and-a-half years]

The GB200 Grace Blackwell Superchip, released a year ago, is a high-capacity processor that powers a larger rack designed to handle the most compute-intensive AI workloads, such as model training. In March, Nvidia unveiled its successor, the more powerful GB300 NVL72 rack system.

Cloud providers began sampling the new processors earlier this month and Nvidia expects shipments to commence later this quarter, Kress said.

As its footprint among hyperscalers continued to expand, Nvidia added to its enterprise product portfolio and forged deeper enterprise partnerships. The company rolled out a line of GPU-powered laptops and workstations in May, turning to its PC manufacturing partners to deliver them to enterprise customers.

“Enterprise AI is just taking off,” Huang said Wednesday, pointing to the new line of on-premises AI hardware. Kress touted an AI development partnership the company inked with Yum Brands in March.

Nvidia will help the corporate parent of KFC, Pizza Hut and Taco Bell deploy AI in 500 restaurants this year and 61,000 locations over time to “streamline order-taking, optimize operations and enhance service,” Kress said.

The initiative represents a step up into the AI big leagues for Yum Brands, which worked with an unnamed AI startup to create a Taco Bell drive-thru chatbot last year. The partnership also marked Nvidia’s first foray into the restaurant business, according to the announcement.

Yum used Nvidia technology to power its proprietary Byte by Yum platform and enable AI voice agents, computer vision tools and performance analytics capabilities.

“Enterprise AI must be deployable on-prem and integrated with existing IT,” Huang said. “It’s compute, storage and networking. We’ve put all three of them together finally, and we’re going to market with that.”

https://www.ciodive.com/news/nvidia-enterprise-ai-yum-brands-hyperscalers/749340/