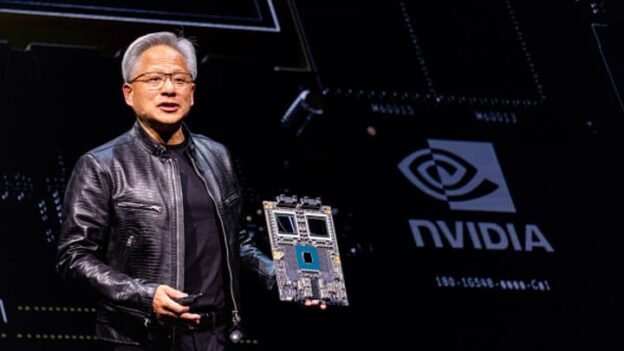

- Nvidia announced it is contributing its Blackwell rack design to OCP

- The move is a boon for Nvidia, but can also help others understand how it solved key design challenges

- Racks may be one thing, but openness for AI servers is still lacking

Liquid cooling, rack designs and chiplets, oh my! A flood of announcements is coming out of the Open Compute Project’s Global Summit this week, among them the news that Nvidia is contributing its Blackwell rack and networking designs to the open source community. But does this even matter, or is it just good press?

We took that question to analysts, who told us it’s actually a little bit of both.

Nvidia is contributing two things to OCP: its GB200 NVL72 system electro-mechanical design, including information on rack architecture, compute and switch tray mechanicals, and liquid-cooling and thermal environment specifications; and details about its NVLink networking cartridges, including volumetrics and precise mounting locations. Both are important for enabling high-density GPU deployments for artificial intelligence use cases.

Jack Gold, founder and principal analyst at J.Gold Associates, said it is no surprise that Nvidia is promoting, by open-sourcing, what it considers most important based on its own needs. Doing so allows it to reach a wider audience and enables vendors to pick up its reference designs.

Sharing is caring

But if you dig a little deeper, both Gold and Dell’Oro Group Research Director Lucas Beran said Nvidia’s move can actually help the ecosystem at large.

“Anytime you can offer open reference designs to the community so they don’t have to reinvent the wheel is a good thing,” Gold said. He noted, for example, that Intel did reference designs for PCs, which helped stimulate activity from vendors.

Beran added that open-sourcing designs like Nvidia’s helps hyperscalers build supply chains around specific solutions, which can drive down costs.

“Given that accelerated computing infrastructure with higher rack densities at scale is still new for many end-users, I’d expect these announcements to be supportive of accelerated computing adoption from a wide range of end users, but still primarily supportive of those contributing to the designs,” he told Fierce.

And digging one more layer down, Beran said Nvidia’s move “signals that some maturation is starting to occur in how end-users are bringing these solutions together and what designs they may start to scale around.”

“Open-sourcing these solutions, or at least some aspects of them may provide insight into how they are overcoming specific challenges,” he added. “This may help other end-users understand how they can overcome similar challenges.”

Limits of openness

Still, openness in the AI ecosystem has its limits. Racks and networking are one thing. Servers are a whole different story.

Dell’Oro Senior Research Director Baron Fung told Fierce, “I think for AI, especially from the standpoint of servers, we haven’t seen much openness.” He noted that hyperscalers are mostly building custom servers for AI and that Nvidia’s AI server architecture is also mostly proprietary.

That said, it’s not clear that open-sourcing server designs would deliver the same kind of benefit as it does for other hardware.

“Given that the roadmap for AI tends to be much shorter than that of traditional computing (with new accelerators/GPUs every two years), an open standard such as OCP may not necessarily aid in reducing the time-to-market of new products to the market,” Fung concluded.

https://www.fierce-network.com/cloud/heres-what-nvidias-ocp-contributions-tell-us-about-ais-progress