The pace of technological innovation has accelerated in the past year, most dramatically in AI. And in 2024, there was no better place to help create those breakthroughs than NVIDIA Research.

NVIDIA Research comprises hundreds of extremely bright people pushing the frontiers of knowledge, not just in AI, but across many areas of technology.

In the past year, NVIDIA Research laid the groundwork for future improvements in GPU performance with major research discoveries in circuits, memory architecture and sparse arithmetic. The team’s invention of novel graphics techniques continues to raise the bar for real-time rendering. And we developed new methods for improving the efficiency of AI — requiring less energy, taking fewer GPU cycles and delivering even better results.

But the most exciting developments of the year have been in generative AI.

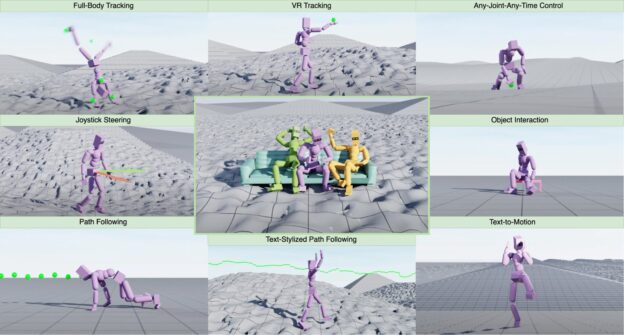

We’re now able to generate not just images and text, but also 3D models, music and sounds. We’re also gaining better control over what is generated, from realistic humanoid motion to sequences of images with consistent subjects.

The application of generative AI to science has resulted in high-resolution weather forecasts that are more accurate than conventional numerical weather models. AI models have given us the ability to accurately predict how blood glucose levels respond to different foods. Embodied generative AI is being used to develop autonomous vehicles and robots.

And that was just this year. What follows is a deeper dive into some of NVIDIA Research’s greatest generative AI work in 2024. Of course, we continue to develop new models and methods for AI, and expect even more exciting results next year.

ConsiStory: AI-Generated Images With Main Character Energy

ConsiStory, a collaboration between researchers at NVIDIA and Tel Aviv University, makes it easier to generate multiple images with a consistent main character — an essential capability for storytelling use cases such as illustrating a comic strip or developing a storyboard.

The researchers’ approach introduced a technique called subject-driven shared attention, which reduces the time it takes to generate consistent imagery from 13 minutes to around 30 seconds.
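The post names the technique but doesn't describe how it works. One plausible reading of shared attention is that each image in a batch attends to its own patches as usual, plus the patches of the other images that belong to the subject. The toy sketch below illustrates that idea in plain Python. It is not the researchers' implementation: the function name `shared_attention`, the list-based inputs and the `subject_mask` argument are all assumptions made for illustration.

```python
import math


def shared_attention(q, k, v, subject_mask):
    """Toy shared attention over a batch of images (illustrative only).

    q, k, v: lists of B images, each a list of N patch vectors of length D.
    subject_mask[j][n] is True when patch n of image j is assumed to belong
    to the shared subject (hypothetical input, not from the article).
    """
    dim = len(q[0][0])
    outputs = []
    for i, queries in enumerate(q):
        # Key/value pool for image i: all of its own patches, plus only the
        # subject patches of every other image in the batch.
        pool = [
            (k[j][n], v[j][n])
            for j in range(len(k))
            for n in range(len(k[j]))
            if j == i or subject_mask[j][n]
        ]
        image_out = []
        for query in queries:
            # Scaled dot-product scores against every key in the pool.
            scores = [
                sum(a * b for a, b in zip(query, key)) / math.sqrt(dim)
                for key, _ in pool
            ]
            # Numerically stable softmax over the pool.
            peak = max(scores)
            weights = [math.exp(s - peak) for s in scores]
            total = sum(weights)
            # Weighted average of the pooled value vectors.
            image_out.append([
                sum(w * value[d] for w, (_, value) in zip(weights, pool)) / total
                for d in range(dim)
            ])
        outputs.append(image_out)
    return outputs
```

With an all-False mask, this reduces to ordinary per-image self-attention. Marking a patch as part of the subject lets every other image in the batch draw on it, which is the intuition behind keeping a character consistent across frames.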

https://blogs.nvidia.com/blog/ai-research-2024/